TiDB Development Guide

About this guide

- The target audience of this guide is TiDB contributors, both new and experienced.

- The objective of this guide is to help contributors become TiDB experts who are familiar with its design and implementation, and who can therefore use it fluently in the real world as well as develop TiDB itself in depth.

The structure of this guide

At present, the guide is composed of the following parts:

- Get started: helps you set up the development environment, build the tidb-server, and connect to it. The subsections follow an imagined newcomer's journey.

- Contribute to TiDB: helps you quickly get involved in the TiDB community by illustrating what contributions you can make and how to quickly make one.

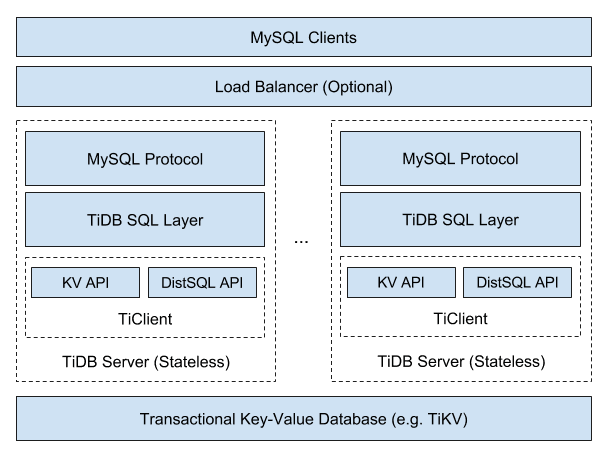

- Understand TiDB: helps you become familiar with basic distributed database concepts and build a mental model of them, including but not limited to the SQL language, key components, and algorithms in a distributed database. Readers who are already familiar with these concepts can skip this section.

- Project Management: helps you participate in teamwork, lead feature development, and manage projects in the TiDB community.

Contributors ✨


This project follows the all-contributors specification. Contributions of any kind welcome!

Get Started

Let's start your TiDB journey! There's a lot to learn, but every journey starts somewhere. In this chapter, we'll discuss:

- Install Golang

- Get the code, build and run

- Setup an IDE

- Write and run unit tests

- Debug and profile

- Run and debug integration tests

- Commit code and submit a pull request

Install Golang

To build TiDB from source code, you need to install Go in your development environment first. If Go is not installed yet, you can follow the instructions in this document for installation.

Install Go

TiDB periodically upgrades its Go version to keep up with Golang. Currently, upgrade plans are announced on the TiDB Internals forum.

To get the right version of Go, take a look at the go.mod file in TiDB's repository. You should see that there is a line like go 1.21 (the number may be different) in the first few lines of this file. You can also run the following command to get the Go version:

curl -s -S -L https://github.com/pingcap/tidb/blob/master/go.mod | grep -Eo "\"go [[:digit:]]+(\.[[:digit:]]+)+\""

Now that you've got the version number, go to Go's download page, choose the corresponding version, and then follow the installation instructions.
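If you prefer to read the version from a local copy of go.mod rather than from GitHub, the lookup can be sketched in Go as follows. This is a minimal illustration, not part of TiDB's tooling: the function name goDirective and the sample file content are assumptions made for the example.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// goDirective returns the version named by the first "go" directive in the
// text of a go.mod file, or "" if there is none.
func goDirective(modText string) string {
	sc := bufio.NewScanner(strings.NewReader(modText))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		// A directive line looks like "go 1.21" or "go 1.21.0".
		if len(fields) == 2 && fields[0] == "go" {
			return fields[1]
		}
	}
	return ""
}

func main() {
	// Sample content only; the version in the real file may differ.
	sample := "module github.com/pingcap/tidb\n\ngo 1.21\n\nrequire (\n)\n"
	fmt.Println(goDirective(sample))
}
```

The sketch only looks for a line with exactly two fields, so a directive followed by a trailing comment would be missed; that is acceptable for a quick check but not for general go.mod parsing.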

Manage the Go toolchain using gvm

If you are using Linux or macOS, you can easily manage Go versions with Go Version Manager (gvm).

To install gvm, run the following command:

curl -s -S -L https://raw.githubusercontent.com/moovweb/gvm/master/binscripts/gvm-installer | sh

Once you have gvm installed, you can use it to manage multiple versions of the Go compiler. Let's install the corresponding Go version and set it as default:

TIDB_GOVERSION=$(curl -s -S -L https://github.com/pingcap/tidb/blob/master/go.mod | grep -Eo "\"go [[:digit:]]+(\.[[:digit:]]+)+\"" | grep -Eo "[[:digit:]]+\.[[:digit:]]+(\.[[:digit:]]+)?")

gvm install go${TIDB_GOVERSION}

gvm use go${TIDB_GOVERSION} --default

Now, you can type go version in the shell to verify the installation:

go version

# Note: In your case, the version number might not be '1.21', it should be the

# same as the value of ${TIDB_GOVERSION}.

#

# OUTPUT:

# go version go1.21 linux/amd64

In the next chapter, you will learn how to obtain the TiDB source code and how to build it.

If you encounter any problems during your journey, do not hesitate to reach out on the TiDB Internals forum.

Get the code, build, and run

Prerequisites

- git: The TiDB source code is hosted on GitHub as a git repository. To work with the git repository, please install git.

- go: TiDB is a Go project. Therefore, you need a working Go environment to build it. See the previous Install Golang section to prepare the environment.

- gcc: The gcc command is required to use cgo while building. To install gcc, search for an appropriate install guide for your OS.

- mysql client (optional): After building TiDB from source, you can use the official MySQL client to connect to TiDB. It is not required if you want to build TiDB only.

Note:

TiDB can compile and run on Windows 10. However, it is not expected to be deployed on Windows, where you might encounter many compatibility problems. For a better experience, we recommend that you install WSL2 first.

Clone

Clone the source code to your development machine:

git clone https://github.com/pingcap/tidb.git

Build

Build TiDB from the source code:

cd tidb

make

Run

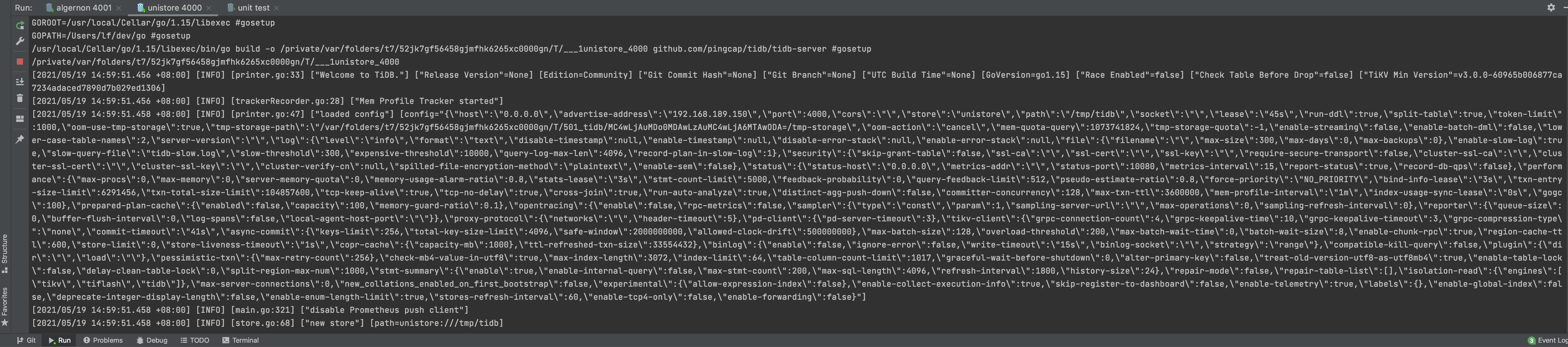

Now that you have the tidb-server binary under the bin directory, run it to start a TiDB server instance:

./bin/tidb-server

This starts the TiDB server listening on port 4000 with embedded unistore.

Connect

You can use the official MySQL client to connect to TiDB:

mysql -h 127.0.0.1 -P 4000 -u root -D test --prompt="tidb> " --comments

where

- -h 127.0.0.1 sets the Host to local host loopback interface

- -P 4000 uses port 4000

- -u root connects as root user (-p not given; the development build has no password for root.)

- -D test uses the Schema/Database test

- --prompt "tidb> " sets the prompt to distinguish it from a connection to MySQL

- --comments preserves comments like /*T

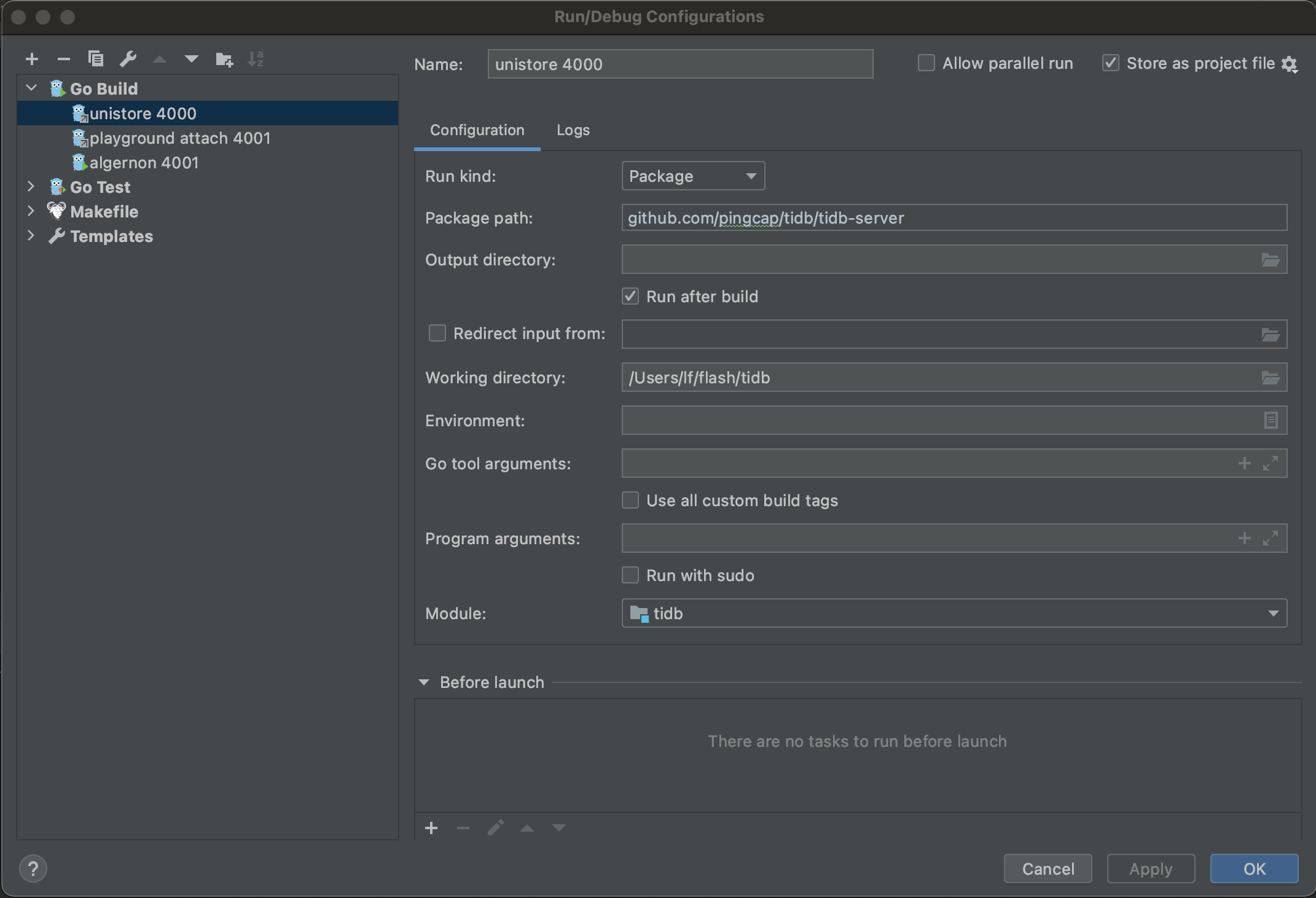

Populate run configurations

Under the root directory of the TiDB source code, execute the following commands to add config files:

mkdir -p .idea/runConfigurations/ && cd .idea/runConfigurations/

cat <<EOF > unistore_4000.xml

<component name="ProjectRunConfigurationManager">

<configuration default="false" name="unistore 4000" type="GoApplicationRunConfiguration" factoryName="Go Application">

<module name="tidb" />

<working_directory value="\$PROJECT_DIR\$" />

<kind value="PACKAGE" />

<filePath value="\$PROJECT_DIR\$" />

<package value="github.com/pingcap/tidb/cmd/tidb-server" />

<directory value="\$PROJECT_DIR\$" />

<method v="2" />

</configuration>

</component>

EOF

cat <<EOF > playground_attach_4001.xml

<component name="ProjectRunConfigurationManager">

<configuration default="false" name="playground attach 4001" type="GoApplicationRunConfiguration" factoryName="Go Application">

<module name="tidb" />

<working_directory value="\$PROJECT_DIR\$" />

<parameters value="--path=127.0.0.1:2379 --store=tikv --status=10081 -P 4001 " />

<kind value="PACKAGE" />

<filePath value="\$PROJECT_DIR\$/cmd/tidb-server/main.go" />

<package value="github.com/pingcap/tidb/cmd/tidb-server" />

<directory value="\$PROJECT_DIR\$" />

<method v="2" />

</configuration>

</component>

EOF

cat <<EOF > unit_test.xml

<component name="ProjectRunConfigurationManager">

<configuration default="false" name="unit test" type="GoTestRunConfiguration" factoryName="Go Test">

<module name="tidb" />

<working_directory value="\$PROJECT_DIR\$" />

<go_parameters value="-race --tags=intest,deadlock" />

<framework value="gocheck" />

<kind value="DIRECTORY" />

<package value="github.com/pingcap/tidb" />

<directory value="\$PROJECT_DIR\$/pkg/planner/core" />

<filePath value="\$PROJECT_DIR\$" />

<pattern value="TestEnforceMPP" />

<method v="2" />

</configuration>

</component>

EOF

Now, confirm there are three config files:

ls

# OUTPUT:

# playground_attach_4001.xml

# unistore_4000.xml

# unit_test.xml

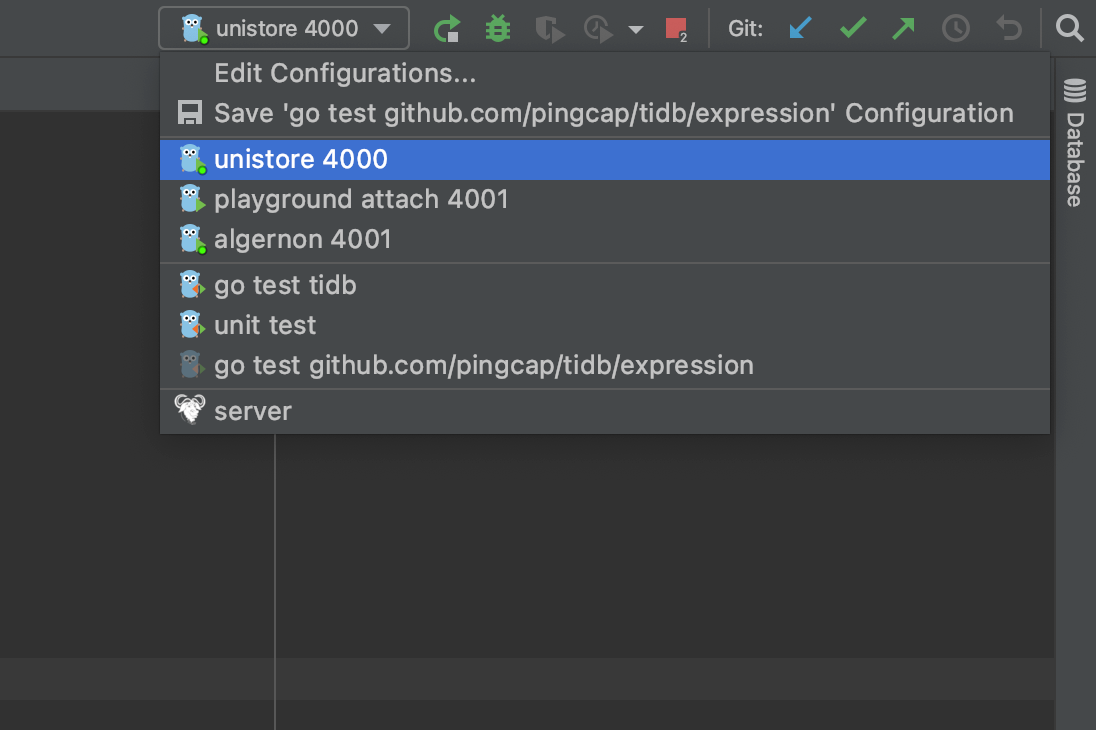

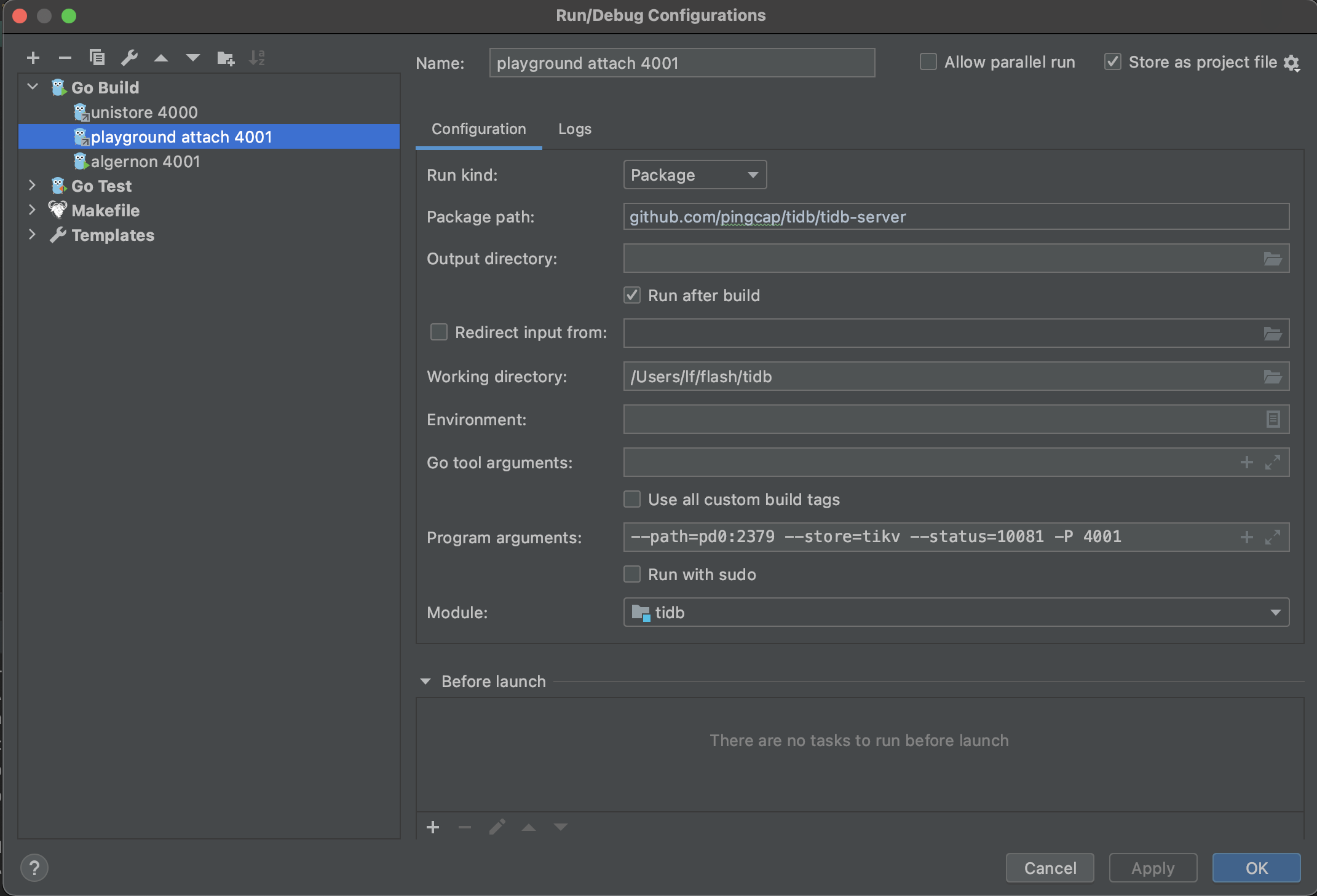

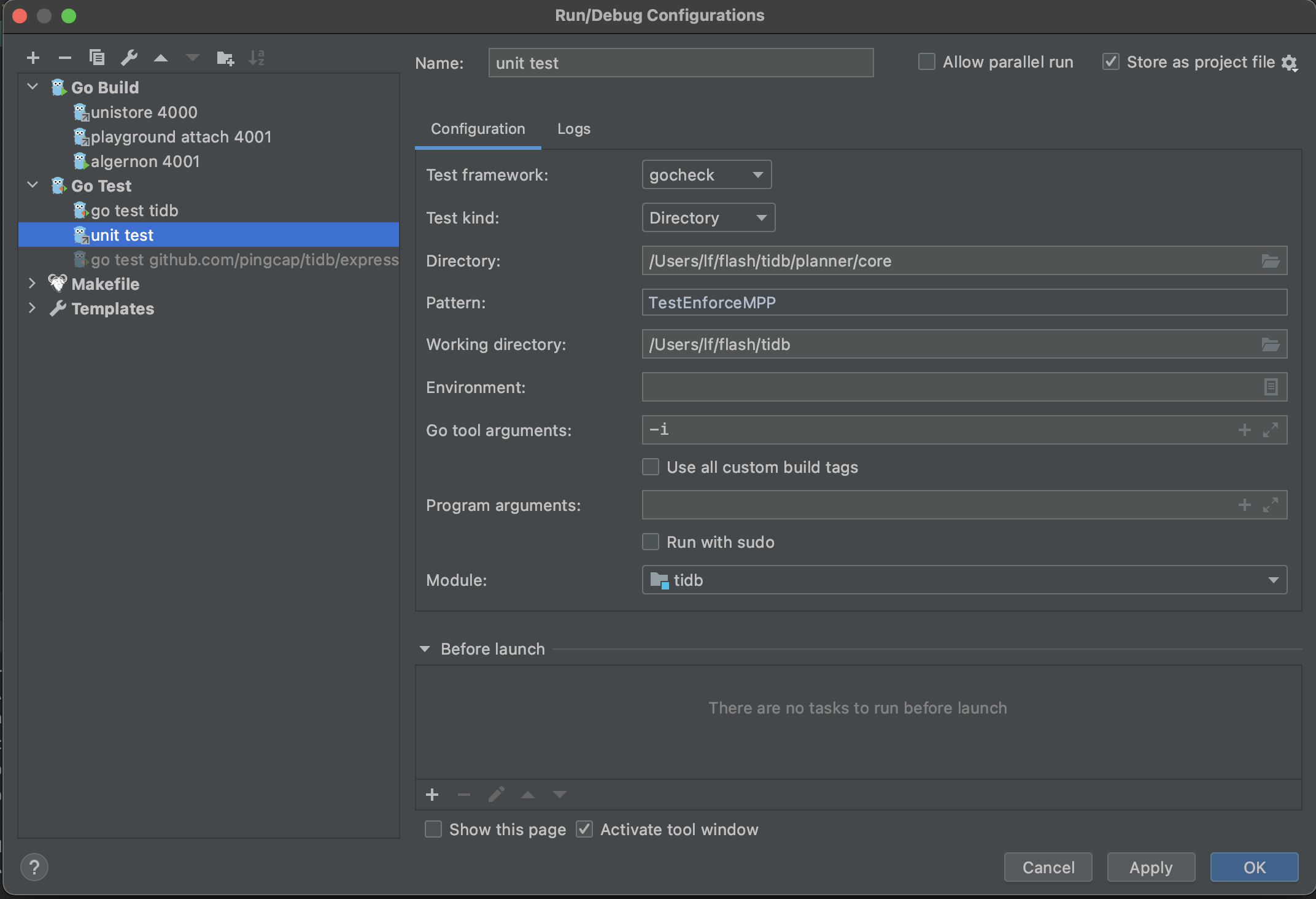

Run or debug

Now you can see the run/debug configs in the upper-right corner of the window.

The first config is unistore 4000, which enables you to run/debug TiDB independently without TiKV, PD, and TiFlash.

The second config is playground attach 4001, which enables you to run/debug TiDB by attaching to an existing cluster; for example, a cluster deployed with tiup playground.

After the server process starts, you can connect to the original TiDB on port 4000 and to your TiDB on port 4001 at the same time.

The third config is unit test, which enables you to run/debug unit tests. You may modify the Directory and Pattern to run other tests.


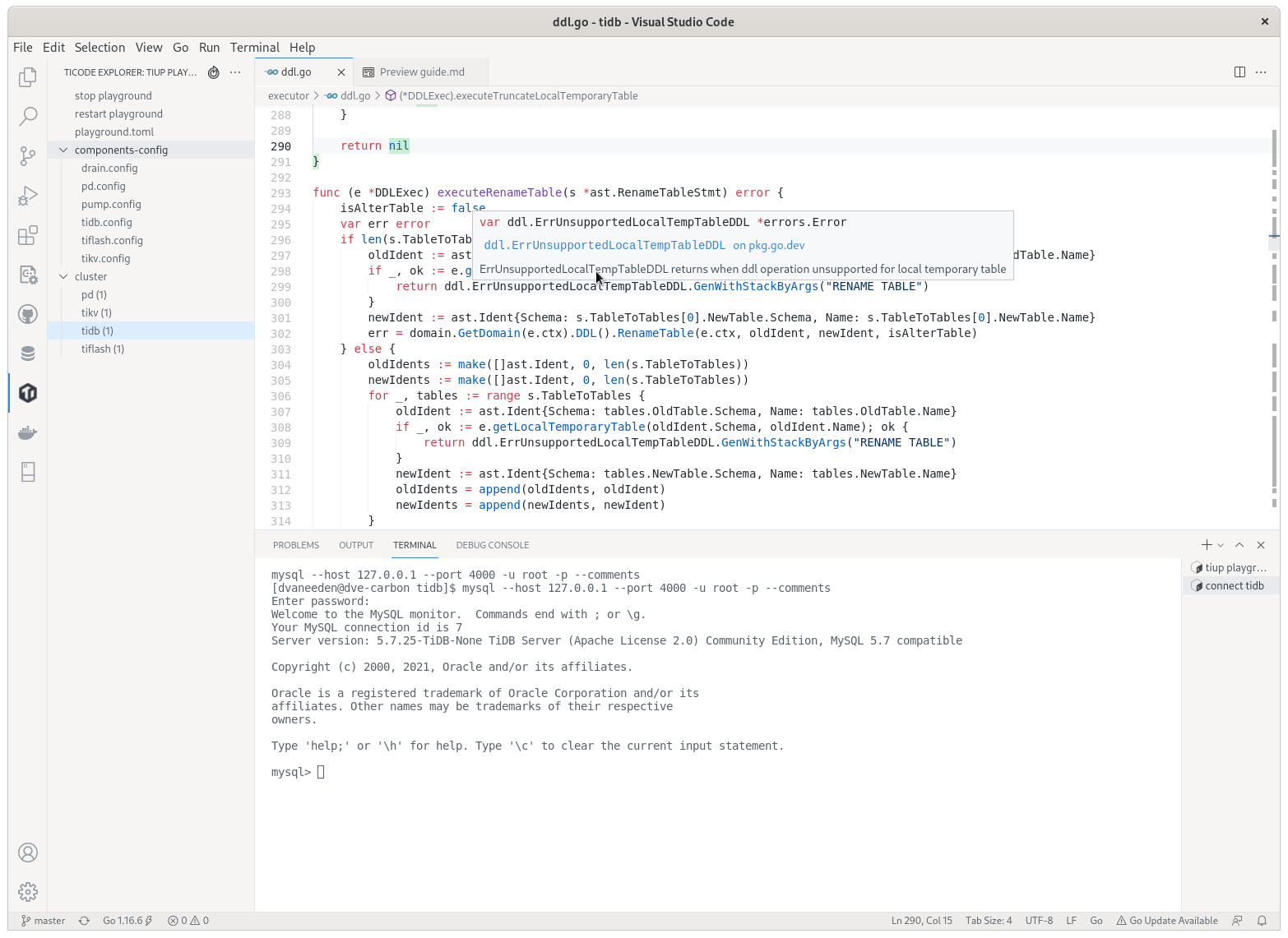

Visual Studio Code

VS Code is a generic IDE that has good extensions for working with Go and TiDB.

Prerequisites

- go: TiDB is a Go project, so building it requires a working go environment. See the previous Install Golang section to prepare the environment.

- TiDB source code: See the previous Get the code, build and run section to get the source code.

Download VS Code

Download VS Code from its official website and install it.

Now install these extensions:

Work with TiDB code in VS Code

Open the folder containing TiDB code via File→Open Folder. See the VS Code docs for how to edit and commit code.

There is a detailed guide explaining how to use the TiDE extension.

Populate run configurations

Under the root directory of the TiDB source code, execute the following commands to add config files:

mkdir -p .vscode

echo "{

\"go.testTags\": \"intest,deadlock\"

}" > .vscode/settings.json

Write and run unit tests

The Golang testing framework provides several functionalities and conventions that help structure and execute tests efficiently. To enhance its assertion and mocking capabilities, we use the testify library.

You may find tests using pingcap/check, which is a fork of go-check/check, in release branches before release-6.1. Because that framework is poorly maintained, we have migrated to testify since release-6.1. You can check the background and progress on the migration tracking issue.

How to write unit tests

We use testify to write unit tests. Basically, it is out-of-the-box testing with testify assertions.

TestMain

When you run tests, Golang compiles each package along with any files whose names end with the suffix _test.go. Thus, a test binary contains all the tests in a package.

Golang testing provides a mechanism for extra setup or teardown before or after testing: you write a function that is unique within the package:

func TestMain(m *testing.M)

We leverage this function to detect goroutine leaks with goleak after all tests finish.

Before you write any unit tests in a package, create a file named main_test.go and set up the scaffolding:

func TestMain(m *testing.M) {

goleak.VerifyTestMain(m)

}

You can also put global variables or helper functions of the test binary in this file.
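To give an intuition for what a leak check does, here is a simplified stand-alone sketch that compares the number of live goroutines before and after running a function. It is not how goleak is implemented, and the helper name leaks and the polling interval are assumptions chosen for the illustration.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// leaks runs f and reports whether extra goroutines are still alive shortly
// afterwards. It polls for a while so that goroutines that are merely slow to
// exit are not counted as leaks.
func leaks(f func()) bool {
	before := runtime.NumGoroutine()
	f()
	deadline := time.Now().Add(500 * time.Millisecond)
	for time.Now().Before(deadline) {
		if runtime.NumGoroutine() <= before {
			return false
		}
		time.Sleep(10 * time.Millisecond)
	}
	return true
}

func main() {
	block := make(chan struct{})

	// clean waits for its goroutine to finish before returning.
	clean := func() {
		done := make(chan struct{})
		go func() { close(done) }()
		<-done
	}
	// leaky starts a goroutine that blocks forever on a channel.
	leaky := func() { go func() { <-block }() }

	fmt.Println("clean leaks:", leaks(clean))
	fmt.Println("leaky leaks:", leaks(leaky))
}
```

Counting goroutines is a coarse signal; a real leak detector also reports the stack of each unexpected goroutine, as the failure report format later in this chapter shows.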

Assertion

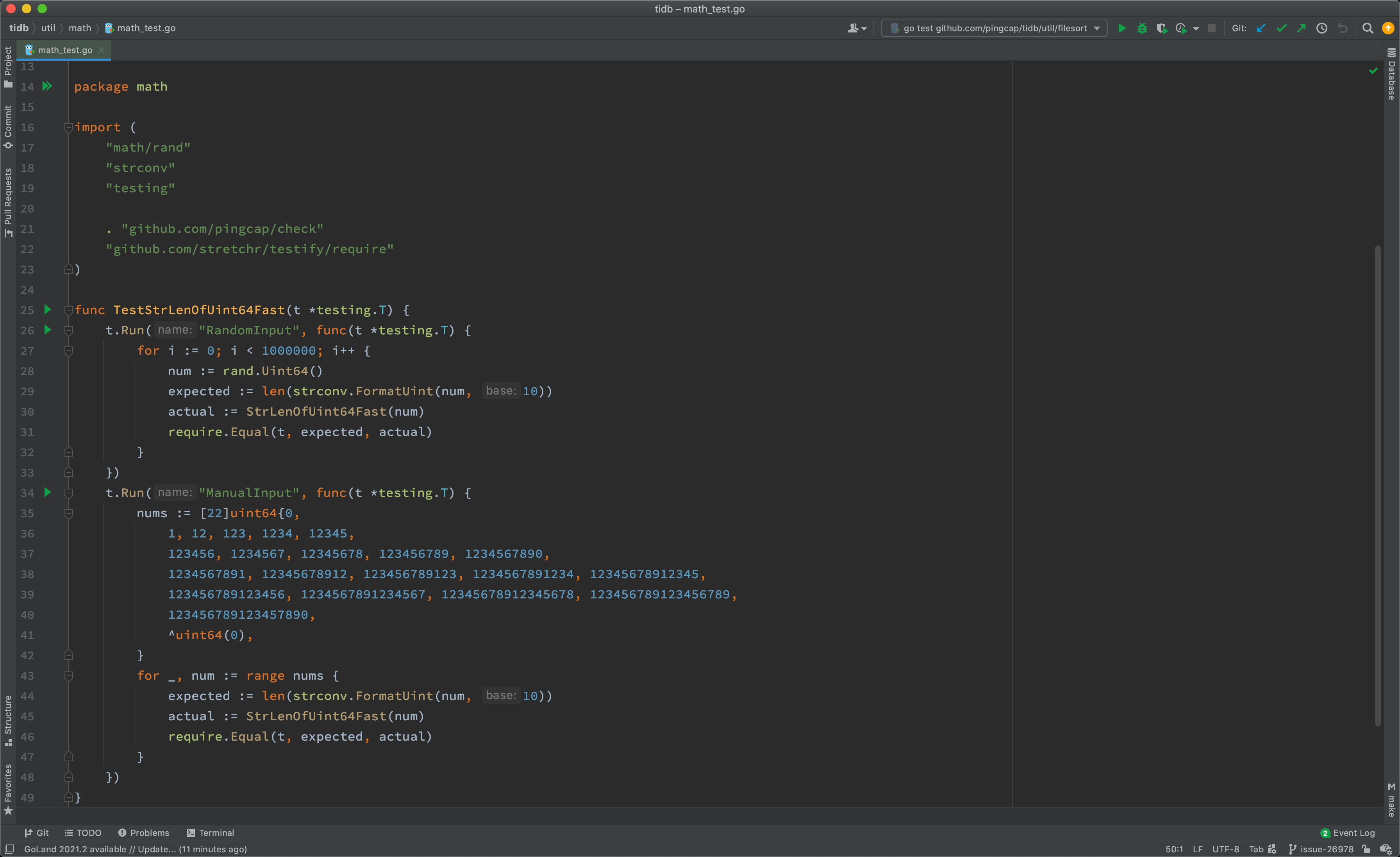

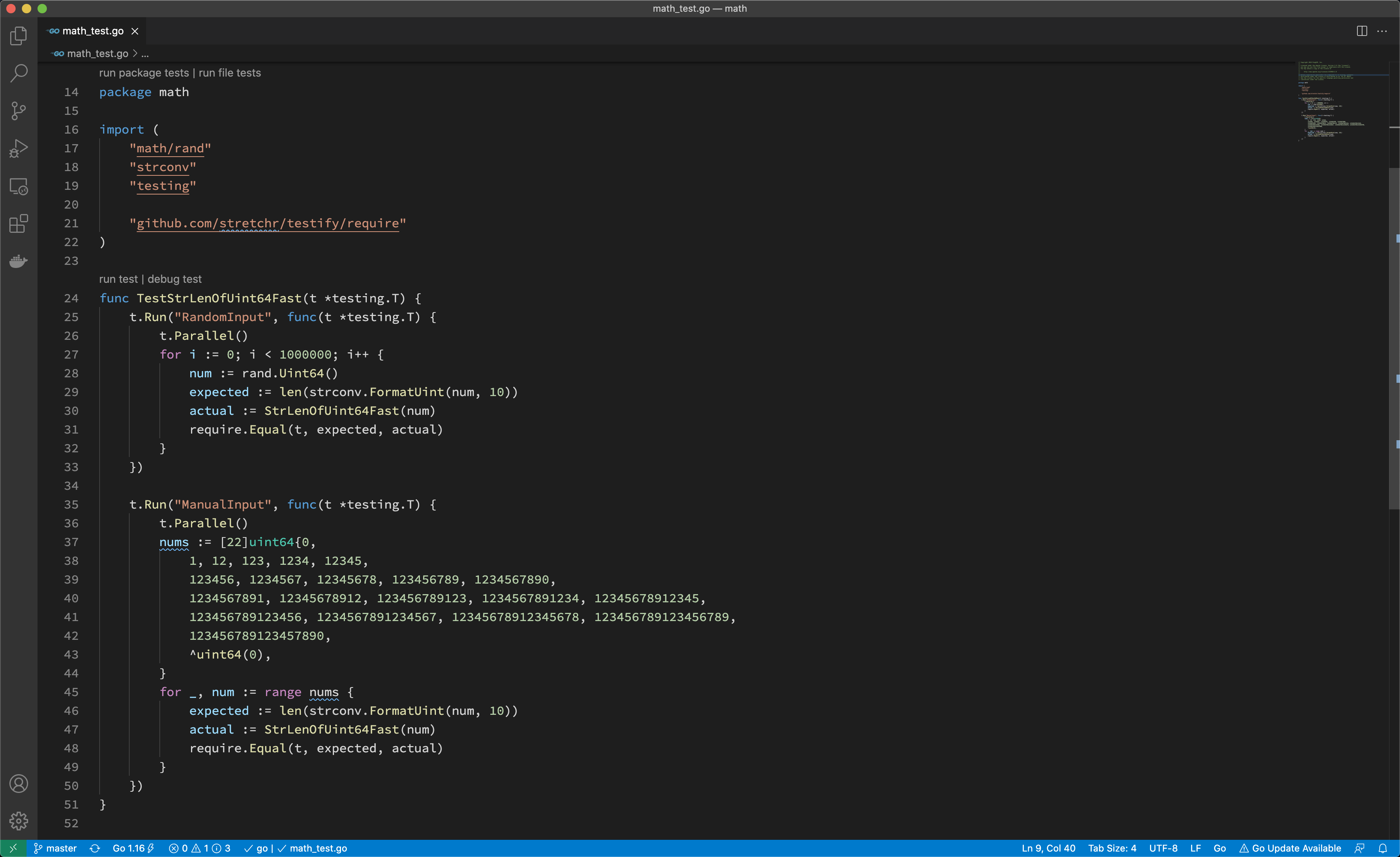

Let's write a basic test for the utility function StrLenOfUint64Fast:

func TestStrLenOfUint64Fast(t *testing.T) {

for i := 0; i < 1000000; i++ {

num := rand.Uint64()

expected := len(strconv.FormatUint(num, 10))

actual := StrLenOfUint64Fast(num)

require.Equal(t, expected, actual)

}

}
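The same comparison can be tried outside the TiDB source tree with a small stand-alone program. In this sketch, digitCount is a hypothetical stand-in for StrLenOfUint64Fast (it is not TiDB's implementation), and firstMismatch plays the role of the test loop without using testify.

```go
package main

import (
	"fmt"
	"math/rand"
	"strconv"
)

// digitCount returns the number of decimal digits needed to print n.
// It is a hypothetical stand-in for StrLenOfUint64Fast, not TiDB's code.
func digitCount(n uint64) int {
	count := 1
	for n >= 10 {
		n /= 10
		count++
	}
	return count
}

// firstMismatch compares digitCount with the strconv-based reference on
// `rounds` random inputs and returns the first input where they differ.
func firstMismatch(rounds int) (uint64, bool) {
	for i := 0; i < rounds; i++ {
		num := rand.Uint64()
		if digitCount(num) != len(strconv.FormatUint(num, 10)) {
			return num, true
		}
	}
	return 0, false
}

func main() {
	_, bad := firstMismatch(100000)
	fmt.Println("mismatch found:", bad)
}
```

Checking a fast implementation against a slow but obviously correct reference on random inputs is the design choice the test above relies on.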

Golang testing detects test functions from *_test.go files of the form:

func TestXxx(*testing.T)

where Xxx does not start with a lowercase letter. The function name identifies the test routine.

We follow this pattern but use testify assertions instead of out-of-the-box methods, like Error or Fail, since they are too low level to use.

We mostly use require.Xxx for assertions, which is imported from github.com/stretchr/testify/require. If an assertion fails, the test fails immediately; we prefer tests to fail fast.

Below are the most frequently used assertions:

func Equal(t TestingT, expected interface{}, actual interface{}, msgAndArgs ...interface{})

func EqualValues(t TestingT, expected interface{}, actual interface{}, msgAndArgs ...interface{})

func Len(t TestingT, object interface{}, length int, msgAndArgs ...interface{})

func Nil(t TestingT, object interface{}, msgAndArgs ...interface{})

func NoError(t TestingT, err error, msgAndArgs ...interface{})

func NotNil(t TestingT, object interface{}, msgAndArgs ...interface{})

func True(t TestingT, value bool, msgAndArgs ...interface{})

You can find other assertions in the documentation.

Parallel

Golang testing provides a method of testing.T to run tests in parallel:

t.Parallel()

We leverage this function to run tests in parallel as much as possible, so that we make full use of the available resources.

When some tests must run serially, use Golang testing subtests and mark only the parent test as parallel. In this way, the subtests of the same parent run serially.

func TestParent(t *testing.T) {

t.Parallel()

// <setup code>

t.Run("Serial 0", func(t *testing.T) { ... })

t.Run("Serial 1", func(t *testing.T) { ... })

t.Run("Serial 2", func(t *testing.T) { ... })

// <tear-down code>

}

Generally, if a test modifies global configs or fail points, it should be run in serial.

When writing parallel tests, there are several common considerations.

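In Go versions before 1.22, the loop iterator variable is a single variable that takes a different value in each loop iteration. Thus, when a parallel subtest captures it, it is highly likely that every subtest sees the last element. You can use the paradigm below to work around this. For modules that declare go 1.22 or later, each iteration has its own copy of the variable, so the explicit copy is harmless but no longer required.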

func TestParallelWithRange(t *testing.T) {

for _, test := range tests {

// copy iterator variable into a new variable, see issue #27779 in tidb repo

test := test

t.Run(test.Name, func(t *testing.T) {

t.Parallel()

...

})

}

}
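The copy-per-iteration paradigm is not specific to tests. The following stand-alone sketch applies it to plain goroutines; the function name namesSeen is hypothetical and the example is only meant to show where the copy goes.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// namesSeen starts one goroutine per name and returns the names that the
// goroutines observed, sorted. Each closure captures its own copy.
func namesSeen(names []string) []string {
	var (
		mu   sync.Mutex
		wg   sync.WaitGroup
		seen []string
	)
	for _, name := range names {
		name := name // per-iteration copy; redundant but harmless on Go 1.22+
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			defer mu.Unlock()
			seen = append(seen, name)
		}()
	}
	wg.Wait()
	sort.Strings(seen)
	return seen
}

func main() {
	fmt.Println(namesSeen([]string{"a", "b", "c"}))
}
```

Because of the explicit copy, the result does not depend on which loop-variable semantics the Go toolchain uses.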

Test kits

Most of our tests are much more complex than what we describe above. For example, to set up a test, we may create a mock storage, a mock session, or even a local database instance.

These functions are known as test kits. Some are used in only one package, so we implement them in place; others are quite common, so we move them to the testkit directory.

When you write complex unit tests, you may take a look at what test kits we have now and try to leverage them. If we don’t have a test kit for your issue and your issue is considered common, add one.

How to run unit tests

Running all tests

You can always run all tests by executing the ut (which stands for unit test) target in the Makefile:

make ut

This is almost equivalent to go test ./... but it enables and disables fail points before and after running tests.

pingcap/failpoint is an implementation of failpoints for Golang. A fail point marks a place in the code where you can inject errors. It is a code snippet that is only executed when the corresponding fail point is active.
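The idea of a fail point can be sketched without any library. The example below is a hypothetical illustration of the concept only; it does not use or mirror the pingcap/failpoint API, and the names registry, enable, disable, inject, and writeRow are all invented for this sketch.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// registry holds the fail points that are currently active.
// This is a hypothetical illustration, not the pingcap/failpoint API.
type registry struct {
	mu     sync.RWMutex
	active map[string]error
}

func newRegistry() *registry { return &registry{active: map[string]error{}} }

// enable activates a fail point so that inject returns an error for it.
func (r *registry) enable(name, msg string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.active[name] = errors.New(msg)
}

// disable deactivates a fail point.
func (r *registry) disable(name string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	delete(r.active, name)
}

// inject returns the configured error if the fail point is active, else nil.
func (r *registry) inject(name string) error {
	r.mu.RLock()
	defer r.mu.RUnlock()
	return r.active[name]
}

// writeRow is production-style code with one injection site.
func writeRow(r *registry) error {
	if err := r.inject("write-row-error"); err != nil {
		return err
	}
	return nil // the real write would happen here
}

func main() {
	r := newRegistry()
	fmt.Println(writeRow(r)) // fail point inactive
	r.enable("write-row-error", "injected: disk full")
	fmt.Println(writeRow(r)) // fail point active
	r.disable("write-row-error")
	fmt.Println(writeRow(r)) // inactive again
}
```

A test would enable the fail point, exercise the error path, and disable it again, which is why tests that touch fail points are usually run serially.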

Running a single test

To run a single test, you can manually repeat what make ut does and narrow the scope to one test or one package:

make failpoint-enable

cd pkg/domain

go test -v -run TestSchemaValidator # or with any other test flags

cd ../..

make failpoint-disable

Or, if it is an older test that does not use testify:

make failpoint-enable

(cd pkg/planner/core ; go test -v -run "^TestT$" -check.f TestBinaryOpFunction )

make failpoint-disable

If you want to compile the test into a debug binary for running in a debugger, you can also use go test -gcflags="all=-N -l" -o ./t, which disables optimizations and outputs a t binary file ready to be used, for example with dlv exec ./t. You can combine it with the above to debug only a single test: dlv exec ./t -- -test.run "^TestT$" -check.f TestBinaryOpFunction.

Note that there is also a ut utility for running tests; see the Makefile and tools/bin/ut.

To display information on all the test flags, enter go help testflag.

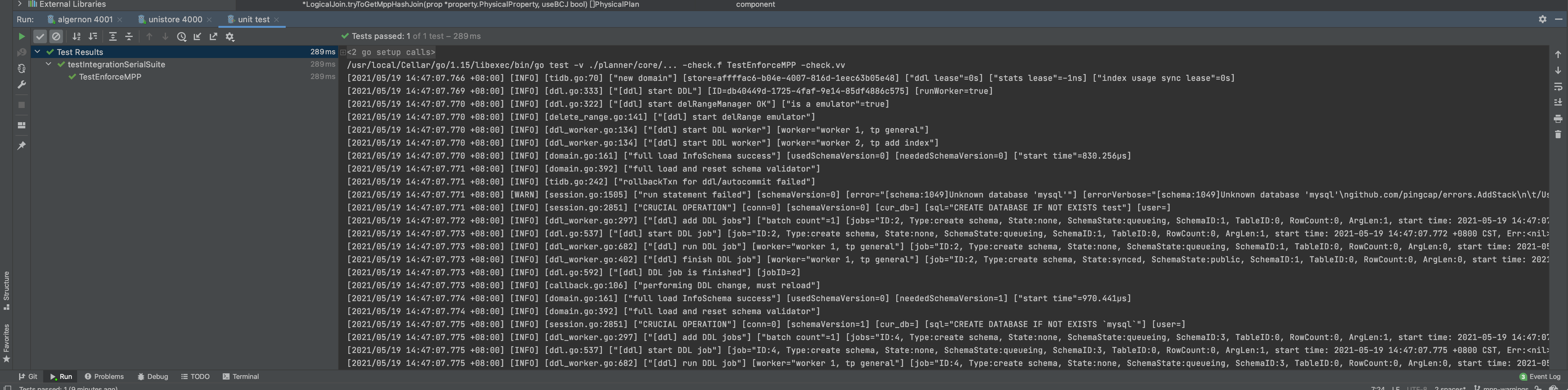

If you develop with GoLand, you can also run a test from the IDE with manually enabled and disabled fail points. See the documentation for details.

As shown above, you can run tests of the whole package, of a test, or of a subtest, by clicking the corresponding gutter icon.

If you develop with VS Code, you can also run a test from the editor with manually enabled and disabled fail points. See the documentation for details.

As shown above, you can run tests of the whole package, of a test, or of a file.

Running tests for a pull request

If you haven't joined the organization, you should wait for a member to comment /ok-to-test on your pull request.

Before you merge a pull request, it must pass all tests.

Generally, continuous integration (CI) runs the tests for you. However, if you want to run tests with conditions or rerun tests on failure, you should know how to do that. A comment with the rerun guide is posted when the CI tests fail.

/retest

Rerun all failed CI test cases.

/test {{test1}} {{testN}}

Run the given failed CI tests.

CI parameters

CI jobs accept the following parameters passed from the pull request title:

format: <origin pr title> | <the CI args pairs>

CI args pairs:

- tikv=<branch>|<pr/$num> specifies which tikv to use.

- pd=<branch>|<pr/$num> specifies which pd to use.

- tidb-test=<branch>|<pr/$num> specifies which tidb-test to use.

For example:

pkg1: support xxx feature | tidb-test=pr/1234

pkg2: support yyy feature | tidb-test=release-6.5 tikv=pr/999
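To make the title format concrete, the following sketch splits such a title into its two parts. It only illustrates the format described above; it is not the parser the CI uses, and the function name parseCIArgs is an assumption.

```go
package main

import (
	"fmt"
	"strings"
)

// parseCIArgs splits a pull request title of the form
// "<origin pr title> | <the CI args pairs>" into the title and a map of
// key=value pairs. A title without "|" yields an empty map.
func parseCIArgs(prTitle string) (string, map[string]string) {
	args := map[string]string{}
	title, rest, found := strings.Cut(prTitle, "|")
	title = strings.TrimSpace(title)
	if !found {
		return title, args
	}
	// Pairs are separated by whitespace; each pair is key=value.
	for _, pair := range strings.Fields(rest) {
		if key, value, ok := strings.Cut(pair, "="); ok {
			args[key] = value
		}
	}
	return title, args
}

func main() {
	title, args := parseCIArgs("pkg2: support yyy feature | tidb-test=release-6.5 tikv=pr/999")
	fmt.Println(title)
	fmt.Println(args["tidb-test"], args["tikv"])
}
```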

How to find failed tests

There are several common causes of failed tests.

Assertion failed

The most common cause of failed tests is a failed assertion. Its failure report looks like:

=== RUN TestTopology

info_test.go:72:

Error Trace: info_test.go:72

Error: Not equal:

expected: 1282967700000

actual : 1628585893

Test: TestTopology

--- FAIL: TestTopology (0.76s)

To find this type of failure, search the report output with grep -i "FAIL".
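When a report is long, listing only the names of the failed tests can be handier than reading raw grep output. The sketch below does that for the "--- FAIL:" lines shown above; the function name failedTests is hypothetical, and the sample report is abbreviated.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// failedTests returns the names of tests reported as "--- FAIL: Name (...)".
func failedTests(report string) []string {
	const marker = "--- FAIL: "
	var names []string
	sc := bufio.NewScanner(strings.NewReader(report))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if !strings.HasPrefix(line, marker) {
			continue
		}
		// The test name is the first field after the marker.
		if fields := strings.Fields(strings.TrimPrefix(line, marker)); len(fields) > 0 {
			names = append(names, fields[0])
		}
	}
	return names
}

func main() {
	report := "=== RUN TestTopology\n    info_test.go:72:\n--- FAIL: TestTopology (0.76s)\n"
	fmt.Println(failedTests(report))
}
```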

Data race

Golang testing supports detecting data race by running tests with the -race flag. Its failure report looks like:

[2021-06-21T15:36:38.766Z] ==================

[2021-06-21T15:36:38.766Z] WARNING: DATA RACE

[2021-06-21T15:36:38.766Z] Read at 0x00c0055ce380 by goroutine 108:

...

[2021-06-21T15:36:38.766Z] Previous write at 0x00c0055ce380 by goroutine 169:

[2021-06-21T15:36:38.766Z] [failed to restore the stack]
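For orientation, the following stand-alone sketch shows the kind of shared-state code the race detector examines, written in its race-free form. The function name countTo is hypothetical and unrelated to TiDB; removing the mutex from it would produce a report similar to the one above when run with the -race flag.

```go
package main

import (
	"fmt"
	"sync"
)

// countTo increments a shared counter from n goroutines. The mutex makes
// each read-modify-write exclusive; without it, the accesses to counter
// would be unsynchronized and the race detector would flag them.
func countTo(n int) int {
	var (
		mu      sync.Mutex
		wg      sync.WaitGroup
		counter int
	)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			counter++ // guarded write
			mu.Unlock()
		}()
	}
	wg.Wait() // all increments are done before counter is read
	return counter
}

func main() {
	fmt.Println(countTo(1000))
}
```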

Goroutine leak

We use goleak to detect goroutine leaks in tests. Its failure report looks like:

goleak: Errors on successful test run: found unexpected goroutines:

[Goroutine 104 in state chan receive, with go.etcd.io/etcd/pkg/logutil.(*MergeLogger).outputLoop on top of the stack:

goroutine 104 [chan receive]:

go.etcd.io/etcd/pkg/logutil.(*MergeLogger).outputLoop(0xc000197398)

/go/pkg/mod/go.etcd.io/etcd@v0.5.0-alpha.5.0.20200824191128-ae9734ed278b/pkg/logutil/merge_logger.go:173 +0x3ac

created by go.etcd.io/etcd/pkg/logutil.NewMergeLogger

/go/pkg/mod/go.etcd.io/etcd@v0.5.0-alpha.5.0.20200824191128-ae9734ed278b/pkg/logutil/merge_logger.go:91 +0x85

To determine the source of package leaks, see the documentation.

Timeout

After @tiancaiamao introduced the timeout checker for continuous integration, every test case should run in at most five seconds.

If a test case takes longer, its failure report looks like:

[2021-08-09T03:33:57.661Z] The following test cases take too long to finish:

[2021-08-09T03:33:57.661Z] PASS: tidb_test.go:874: tidbTestSerialSuite.TestTLS 7.388s

[2021-08-09T03:33:57.661Z] --- PASS: TestCluster (5.20s)
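If you want to spot slow tests locally before CI does, a small filter over the test output is enough. The sketch below handles only result lines of the form "--- PASS: Name (5.20s)"; it does not parse the other line format shown above, it is not the CI's timeout checker, and the name slowTests is an assumption.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// resultLine matches lines such as "--- PASS: TestCluster (5.20s)".
var resultLine = regexp.MustCompile(`--- (?:PASS|FAIL): (\S+) \((\d+(?:\.\d+)?)s\)`)

// slowTests returns the tests whose reported duration exceeds limitSeconds.
func slowTests(report string, limitSeconds float64) []string {
	var slow []string
	for _, m := range resultLine.FindAllStringSubmatch(report, -1) {
		secs, err := strconv.ParseFloat(m[2], 64)
		if err != nil {
			continue // skip lines whose duration cannot be parsed
		}
		if secs > limitSeconds {
			slow = append(slow, m[1])
		}
	}
	return slow
}

func main() {
	report := "--- PASS: TestFast (0.10s)\n--- PASS: TestCluster (5.20s)\n"
	fmt.Println(slowTests(report, 5))
}
```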

For integration tests, refer to Run and debug integration tests.

Debug and profile

In this section, you will learn:

- How to debug TiDB

- How to pause the execution at any line of code to inspect values and stacks

- How to profile TiDB to catch a performance bottleneck

Use delve for debugging

Delve is a debugger for the Go programming language. It provides a command-line debugging experience similar to the GNU Project debugger (GDB), but it is much more Go native than GDB itself.

Install delve

To install delve, see the installation guide. After the installation, depending on how you set your environment variables, you will have an executable file named dlv in either $GOPATH/bin or $HOME/go/bin. You can then run the following command to verify the installation:

$ dlv version

Delve Debugger

Version: 1.5.0

Build: $Id: ca5318932770ca063fc9885b4764c30bfaf8a199 $

Attach delve to a running TiDB process

Once you get the TiDB server running, you can attach the delve debugger.

For example, you can build and run a standalone TiDB server by running the following commands in the root directory of the source code:

make server

./bin/tidb-server

You can then start a new shell and use ps or pgrep to find the PID of the tidb server process you just started:

pgrep tidb-server

# OUTPUT:

# 1394942

If the output lists multiple PIDs, it indicates that you might have multiple TiDB servers running at the same time. To determine the PID of the tidb server you are planning to debug, you can use commands such as ps $PID, where $PID is the PID you are trying to know more about:

ps 1394942

# OUTPUT:

# PID TTY STAT TIME COMMAND

# 1394942 pts/11 SNl 0:02 ./bin/tidb-server

Once you get the PID, you can attach delve to it by running the following command:

dlv attach 1394942

You might get an error message about a kernel security setting, such as the following:

Could not attach to pid 1394942: this could be caused by a kernel security setting, try writing "0" to /proc/sys/kernel/yama/ptrace_scope

To resolve the error, follow the instructions provided in the error message and execute the following command as the root user to override the kernel security setting:

echo 0 > /proc/sys/kernel/yama/ptrace_scope

Then retry attaching delve onto the PID, and it should work.

If you've worked with GDB, the delve debugging interface will look familiar to you. It is an interactive session that lets you control the execution of the TiDB server that delve is attached to. To learn more about delve, you can type help at the prompt and read the help messages.

Use delve for debugging

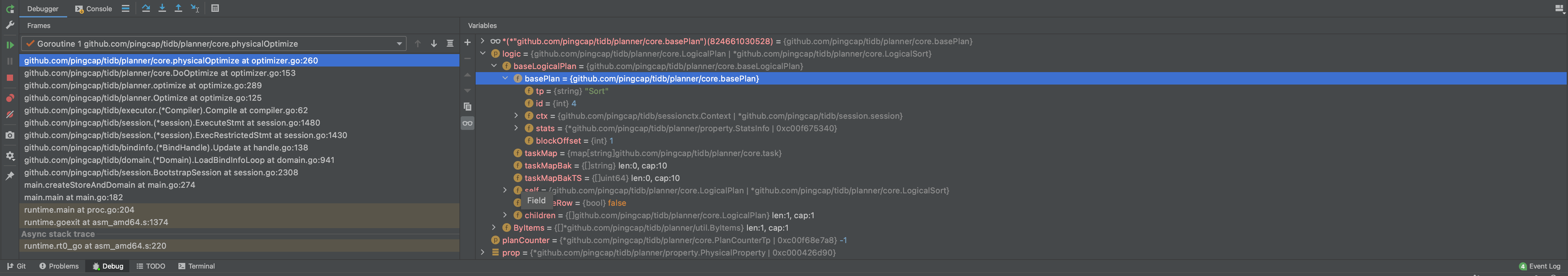

After attaching delve to the running TiDB server process, you can now set breakpoints. TiDB server will pause execution at the breakpoints you specify.

To create a breakpoint, you can write:

break [name] <linespec>

where [name] is the name for the breakpoint, and <linespec> is the position of a line of code in the source code. Note the name is optional.

For example, the following command creates a breakpoint at the Next function of HashJoinExec. (The line number can be subject to change due to the modification of the source code).

dlv debug tidb-server/main.go

# OUTPUT:

# Type 'help' for list of commands.

# (dlv) break executor/join.go:653

# Breakpoint 1 (enabled) set at 0x36752d8 for github.com/pingcap/tidb/executor.(*HashJoinExec).Next() ./executor/join.go:653

# (dlv)

Once the execution is paused, the context of the execution is fully preserved. You are free to inspect the values of different variables, print the calling stack, and even jump between different goroutines. Once you finish the inspection, you can resume the execution by stepping into the next line of code or continue the execution until the next breakpoint is encountered.

Typically, when you use a debugger, you need to take the following steps:

- Locate the code and set a breakpoint.

- Prepare data so that the execution will get through the breakpoint, and pause at the specified breakpoint as expected.

- Inspect values and follow the execution step by step.

Using delve to debug a test case

If a test case fails, you can also use delve to debug it. Get the name of the test case, go to the corresponding package directory, and then run the following command to start a debugging session that will stop at the entry of the test:

dlv test -- -run TestName

Understand how TiDB works through debugging

Besides debugging problems, you can also use the debugger to understand how TiDB works through tracking the execution step by step.

To understand TiDB internals, it's critical that you understand certain functions. To better understand how TiDB works, you can pause the execution of these TiDB functions, and then run TiDB step by step.

For example:

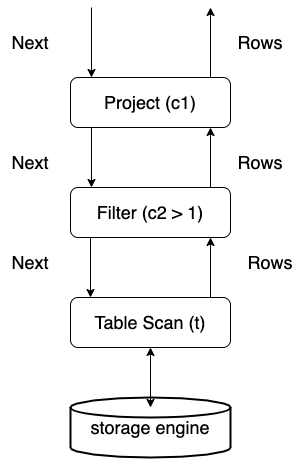

- executor/compiler.go:Compile is where each SQL statement is compiled and optimized.

- planner/planner.go:Optimize is where the SQL optimization starts.

- executor/adapter.go:ExecStmt.Exec is where the SQL plan turns into an executor and where the SQL execution starts.

- Each executor's Open, Next, and Close functions mark the volcano-style execution logic.
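If the volcano model is new to you, the following stand-alone sketch shows the Open/Next/Close cycle on a tiny plan. It is a deliberately simplified, hypothetical interface that returns one integer per call; TiDB's real executor interface differs, and the types scan and filter and the helper drain are invented for this example.

```go
package main

import "fmt"

// executor is a simplified, hypothetical volcano-style operator.
type executor interface {
	Open() error
	Next() (row int, ok bool, err error)
	Close() error
}

// scan produces the rows of an in-memory slice one at a time.
type scan struct {
	rows []int
	pos  int
}

func (s *scan) Open() error { s.pos = 0; return nil }
func (s *scan) Next() (int, bool, error) {
	if s.pos >= len(s.rows) {
		return 0, false, nil // exhausted
	}
	row := s.rows[s.pos]
	s.pos++
	return row, true, nil
}
func (s *scan) Close() error { return nil }

// filter pulls rows from its child and passes on those matching keep.
type filter struct {
	child executor
	keep  func(int) bool
}

func (f *filter) Open() error { return f.child.Open() }
func (f *filter) Next() (int, bool, error) {
	for {
		row, ok, err := f.child.Next()
		if err != nil || !ok {
			return 0, false, err
		}
		if f.keep(row) {
			return row, true, nil
		}
	}
}
func (f *filter) Close() error { return f.child.Close() }

// drain runs the Open/Next/Close cycle on the root and collects all rows.
func drain(root executor) ([]int, error) {
	if err := root.Open(); err != nil {
		return nil, err
	}
	defer root.Close()
	var out []int
	for {
		row, ok, err := root.Next()
		if err != nil {
			return nil, err
		}
		if !ok {
			return out, nil
		}
		out = append(out, row)
	}
}

func main() {
	plan := &filter{
		child: &scan{rows: []int{1, 2, 3, 4}},
		keep:  func(v int) bool { return v%2 == 0 },
	}
	fmt.Println(drain(plan))
}
```

Each operator only knows its child, so a breakpoint in any Next method lets you watch rows being pulled up the tree one call at a time.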

When you are reading the TiDB source code, you are strongly encouraged to set a breakpoint and use the debugger to trace the execution whenever you are confused or uncertain about the code.

Using pprof for profiling

For any database system, performance is always important. If you want to know where the performance bottleneck is, you can use a powerful Go profiling tool called pprof.

Gather runtime profiling information through HTTP end points

Usually, when TiDB server is running, it exposes a profiling end point through HTTP at http://127.0.0.1:10080/debug/pprof/profile. You can get the profile result by running the following commands:

curl -G "127.0.0.1:10080/debug/pprof/profile?seconds=45" > profile.profile

go tool pprof -http 127.0.0.1:4001 profile.profile

The commands capture the profiling information for 45 seconds, and then provide a web view of the profiling result at 127.0.0.1:4001. This view contains a flame graph of the execution and more views that can help you diagnose the performance bottleneck.

You can also gather other runtime information through this end point. For example:

- Goroutine:

curl -G "127.0.0.1:10080/debug/pprof/goroutine" > goroutine.profile

- Trace:

curl -G "127.0.0.1:10080/debug/pprof/trace?seconds=3" > trace.profile

go tool trace -http 127.0.0.1:4001 trace.profile

- Heap:

curl -G "127.0.0.1:10080/debug/pprof/heap" > heap.profile

go tool pprof -http 127.0.0.1:4001 heap.profile

To learn how the runtime information is analyzed, see Go's diagnostics document.
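As a rough illustration of how such endpoints come to exist in a Go program, the sketch below uses the standard net/http/pprof package and downloads a profile from a throwaway local server. How TiDB itself registers its handlers is not shown here; the helper fetchProfile and the test server are assumptions made for the example.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	_ "net/http/pprof" // registers /debug/pprof/ handlers on http.DefaultServeMux
)

// fetchProfile starts a throwaway HTTP server backed by the default mux and
// downloads the named profile (for example "goroutine" or "heap") from it.
// It returns the HTTP status code and the size of the response body.
func fetchProfile(name string) (status int, size int, err error) {
	srv := httptest.NewServer(http.DefaultServeMux)
	defer srv.Close()

	resp, err := http.Get(srv.URL + "/debug/pprof/" + name)
	if err != nil {
		return 0, 0, err
	}
	defer resp.Body.Close()

	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return resp.StatusCode, 0, err
	}
	return resp.StatusCode, len(body), nil
}

func main() {
	status, size, err := fetchProfile("goroutine")
	fmt.Println(status, size > 0, err)
}
```

In a long-running server you would write the body to a file and open it with go tool pprof, as in the curl commands above.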

Profiling during benchmarking

When you are proposing a performance-related feature for TiDB, we recommend that you also include a benchmark result as proof of the performance gain or to show that your code won't introduce any performance regression. In this case, you need to write your own benchmark test, similar to those in executor/benchmark.go.

For example, if you want to benchmark the window functions, because BenchmarkWindow is already in the benchmark tests, you can run the following commands to get the benchmark result:

cd executor

go test -bench BenchmarkWindow -run BenchmarkWindow -benchmem

If you find any performance regression, and you want to know the cause of it, you could use a command like the following:

go test -bench BenchmarkWindow -run BenchmarkWindow -benchmem -memprofile memprofile.out -cpuprofile profile.out

Then, you can use the steps described above to generate and analyze the profiling information.
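If you have not written a Go benchmark before, the sketch below shows the basic shape of one and runs it outside of go test through testing.Benchmark. The workload is a placeholder and the names benchmarkFormat and runOnce are hypothetical; in the TiDB repository you would instead add a BenchmarkXxx function to a _test.go file and run it with go test -bench.

```go
package main

import (
	"fmt"
	"strconv"
	"testing"
)

// benchmarkFormat has the shape of a normal benchmark function: it repeats
// the measured operation b.N times. The workload is a placeholder.
func benchmarkFormat(b *testing.B) {
	b.ReportAllocs() // enables allocation statistics, like -benchmem
	for i := 0; i < b.N; i++ {
		_ = strconv.FormatUint(uint64(i), 10)
	}
}

// runOnce executes the benchmark without "go test" and returns how many
// iterations ran and the average time per iteration in nanoseconds.
func runOnce() (iterations int, nsPerOp int64) {
	testing.Init() // registers testing flags; has no effect if already called
	res := testing.Benchmark(benchmarkFormat)
	return res.N, res.NsPerOp()
}

func main() {
	n, ns := runOnce()
	fmt.Printf("ran %d iterations, about %d ns/op\n", n, ns)
}
```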

Commit the code and submit a pull request

The TiDB project uses Git to manage its source code. To contribute to the project, you need to get familiar with Git features so that your changes can be incorporated into the codebase.

This section addresses some of the most common questions and problems that new contributors might face. This section also covers some Git basics; however, if you find that the content is a little difficult to understand, we recommend that you first read the following introductions to Git:

- The "Beginner" and "Getting Started" sections of this tutorial from Atlassian

- Documentation and guides for beginners from GitHub

- A more in-depth book from Git

Prerequisites

Before you create a pull request, make sure that you've installed Git, forked pingcap/tidb, and cloned the upstream repo to your PC. The following instructions use the command line interface to interact with Git; there are also several GUIs and IDE integrations that can interact with Git too.

If you've cloned the upstream repo, you can reference it using origin in your local repo. Next, you need to set up a remote for the repo you forked using the following command:

git remote add dev https://github.com/your_github_id/tidb.git

You can check the remote setting using the following command:

git remote -v

# dev https://github.com/username/tidb.git (fetch)

# dev https://github.com/username/tidb.git (push)

# origin https://github.com/pingcap/tidb.git (fetch)

# origin https://github.com/pingcap/tidb.git (push)

Standard Process

The following is a normal procedure that you're likely to use for the most common minor changes and PRs:

- Ensure that you're making your changes on top of master and get the latest changes:

git checkout master

git pull origin master

- Create a new branch for your changes:

git checkout -b my-changes

- Make some changes to the repo and test them.

If the repo is built with the Bazel tool, you should also update the Bazel files (*.bazel, DEPS.bzl).

- Commit your changes and push them to your dev remote repository:

# stage files you created/changed/deleted

git add path/to/changed/file.go path/to/another/changed/file.go

# commit changes staged, make sure the commit message is meaningful and readable

git commit -s -m "pkg, pkg2, pkg3: what's changed"

# optionally use `git status` to check if the change set is correct

# git status

# push the change to your `dev` remote repository

git push --set-upstream dev my-changes

- Make a PR from your fork to the master branch of pingcap/tidb. For more information on how to make a PR, see Making a Pull Request in GitHub Guides.

When making a PR, look at the PR template and follow the commit message format, PR title format, and checklists.

After you create a PR, if your reviewer requests code changes, the procedure for making those changes is similar to that of making a PR, with some steps skipped:

- Switch to the branch that is the head and get the latest changes:

git checkout my-changes

git pull

- Make, stage, and commit your additional changes just like before.

- Push those changes to your fork:

git push

If your reviewer requests changes with a GitHub suggestion, you can commit the suggestion from the webpage. GitHub provides documentation for this case.

Conflicts

When you edit your code locally, you are making changes to the version of pingcap/tidb that existed when you created your feature branch. As such, when you submit your PR it is possible that some of the changes that have been made to pingcap/tidb since then conflict with the changes you've made.

When this happens, you need to resolve the conflicts before your changes can be merged. First, get a local copy of the conflicting changes: check out your local master branch with git checkout master, then run git pull origin master to update it with the most recent changes.

Rebasing

You're now ready to start the rebasing process. Check out the branch with your changes and execute git rebase master.

When you rebase a branch on master, all the changes on your branch are reapplied to the most recent version of master. In other words, Git tries to pretend that the changes you made to the old version of master were instead made to the new version of master. During this process, you should expect to encounter at least one "rebase conflict." This happens when Git's attempt to reapply the changes fails because your changes conflict with other changes that have been made. You can tell that this happened because you'll see lines in the output that look like:

CONFLICT (content): Merge conflict in file.go

When you open these files, you'll see sections of the form

<<<<<<< HEAD

Original code

=======

Your code

>>>>>>> 8fbf656... Commit fixes 12345

This represents the lines in the file that Git could not figure out how to rebase. The section between <<<<<<< HEAD and ======= has the code from master, while the other side has your version of the code. You'll need to decide how to deal with the conflict. You may want to keep your changes, keep the changes on master, or combine the two.

Generally, resolving the conflict consists of two steps: First, fix the particular conflict. Edit the file to make the changes you want and remove the <<<<<<<, =======, and >>>>>>> lines in the process. Second, check the surrounding code. If there was a conflict, it's likely there are some logical errors lying around too!

Once you're all done fixing the conflicts, you need to stage the files that had conflicts in them via git add. Afterwards, run git rebase --continue to let Git know that you've resolved the conflicts and it should finish the rebase.

Once the rebase has succeeded, you'll want to update the associated branch on your fork with git push --force-with-lease.

Advanced rebasing

If your branch contains multiple consecutive rewrites of the same code, or if the rebase conflicts are extremely severe, you can use git rebase --interactive master to gain more control over the process. This allows you to choose to skip commits, edit the commits that you do not skip, change the order in which they are applied, or "squash" them into each other.

Alternatively, you can sacrifice the commit history like this:

# squash all the changes into one commit so you only have to worry about conflicts once

git rebase -i $(git merge-base master HEAD) # and squash all changes along the way

git rebase master

# fix all merge conflicts

git rebase --continue

Squashing commits into each other causes them to be merged into a single commit. Simplifying the history this way is both the upside and the downside: the history becomes easier to work with, but you lose track of the steps in which the changes were made.

You may also want to squash together just the last few commits, possibly because they only represent "fixups" and not real changes. For example, git rebase --interactive HEAD~2 allows you to edit the last two commits only.

Setting pre-commit

We use pre-commit to check the code style before committing. To install pre-commit, run:

# Using pip:

pip install pre-commit

# Using homebrew:

brew install pre-commit

After the installation is successful, run pre-commit install in the project root directory to enable the Git pre-commit hook.

Contribute to TiDB

TiDB is developed by an open and friendly community. Everybody is cordially welcome to join the community and contribute to TiDB. We value all forms of contributions, including, but not limited to:

- Code reviewing of the existing patches

- Documentation and usage examples

- Community participation in forums and issues

- Code readability and developer guide

- We welcome contributions that add code comments and refactor code to improve readability

- We also welcome contributions to docs that explain the design choices of the internals

- Test cases to make the codebase more robust

- Tutorials, blog posts, talks that promote the project

Here are guidelines for contributing to various aspects of the project:

- Community Guideline

- Report an Issue

- Issue Triage

- Contribute Code

- Cherry-pick a Pull Request

- Review a Pull Request

- Make a Proposal

- Code Style and Quality Guide

- Write Document

- Release Notes Language Style Guide

- Committer Guide

- Miscellaneous Topics

Any other question? Reach out to the TiDB Internals forum to get help!

Community Guideline

The TiDB community aims to provide a harassment-free, welcoming, and friendly experience for everyone. The first and most important thing for any participant in the community is to be friendly and respectful to others. Improper behavior will be warned against and punished.

We appreciate any contribution in any form to the TiDB community. Thanks so much for your interest in and enthusiasm for TiDB!

Code of Conduct

The TiDB community refuses any kind of behavior that is harmful to the community or its members. Everyone should read our Code of Conduct and behave properly while participating in the community.

Governance

TiDB development is governed by two kinds of groups:

- TOC: TOC serves as the main bridge and channel for coordinating and information sharing across companies and organizations. It is the coordination center for solving problems in terms of resource mobilization, technical research and development direction in the current community and cooperative projects.

- teams: Teams are persistent open groups that focus on a part of the TiDB projects. A team has its reviewer, committer and maintainer, and owns one or more repositories. Team level decision making comes from its maintainers.

A typical promotion path for a TiDB developer is from user to reviewer, then committer and maintainer, and finally maybe a TOC member. But gaining more roles doesn't mean you have any privilege over other community members or any right to control them. Everyone in the TiDB community is equal and shares the responsibility to collaborate constructively with other contributors, building a friendly community. The roles are a natural reward for your substantial contribution to TiDB development and give you more rights in the development workflow to enhance your efficiency. Meanwhile, they require some additional responsibilities from you:

- Now that you are a member of team reviewers/committers/maintainers, you are representing the project and your fellow team members whenever you discuss TiDB with anyone. So please be a good person to defend the reputation of the team.

- Committers/maintainers have the right to approve pull requests, so they bear the additional responsibility of handling the consequences of accepting a change into the codebase or documentation. That includes reverting or fixing it if it causes problems as well as helping out the release manager in resolving any problems found during the pre-release testing cycle. While all contributors are free to help out with this part of the process, and it is most welcome when they do, the actual responsibility rests with the committers/maintainers that approved the change.

- Reviewers/committers/maintainers also bear the primary responsibility for guiding contributors toward the right working procedure, like deciding when changes proposed on the issue tracker should be escalated to internal.tidb.io for wider discussion, as well as suggesting the use of the TiDB Design Documents process to manage the design and justification of complex changes, or changes with a potentially significant impact on end users.

Holding no role should never block you from contributing. It's totally fine to reject a promotion offer if you don't want to take on the additional responsibilities or for any other reason. Besides code and documentation, any other kind of contribution to the community is highly appreciated. Let's grow the TiDB ecosystem through our contributions to this community.

Report an Issue

If you think you have found an issue in TiDB, you can report it to the issue tracker. Issues with the TiDB documentation or this development guide are tracked in separate GitHub repositories, so file them in the corresponding tracker: the TiDB document issue tracker or the TiDB Development Guide issue tracker. Read Write Document for more details.

Checking if an issue already exists

The first step before filing an issue report is to see whether the problem has already been reported. You can use the search bar to search existing issues. This doesn't always work, and sometimes it's hard to know what to search for, so consider this extra credit. We won't mind if you accidentally file a duplicate report, and don't blame yourself if your issue is closed as a duplicate. If you are not sure about any part of your issue report, we highly recommend turning to internal.tidb.io, where a wider audience can discuss it or help.

Filing an issue

If the problem you're reporting is not already in the issue tracker, you can open a GitHub issue with your GitHub account. TiDB uses issue templates for different kinds of issues. An issue template is a set of questions that collects the necessary information about the problem and makes it easy for other contributors to participate. For example, the bug report issue template consists of four questions:

- Minimal reproduce step.

- What did you expect to see?

- What did you see instead?

- What is your TiDB version?

Answering these questions gives the details about your problem so other contributors or TiDB users can pick up your issue more easily.

As the previous section suggests, duplicate issues should be kept to a minimum. To help others who encounter the same problem find your issue, besides the problem details you give in the issue template, write a descriptive title that contains information unique to the problem. This can be the component your issue belongs to, the database features it uses, the conditions that trigger the bug, or part of the error message if there is any.

Making good issues

Besides a good title and a detailed issue message, you can also add suitable labels to your issue via /label, especially labels for the component the issue belongs to and the versions it affects. Many committers and contributors only focus on certain subsystems of TiDB, so setting the appropriate component is important for getting their attention. Some issues might affect multiple releases. See the Issue Triage chapter for more information about what needs to be done with such issues.

If you are able to, consider the following questions about your issue:

- Does the feature fit well into TiDB's architecture? Will it scale and keep TiDB flexible for the future, or will the feature restrict TiDB in the future?

- Is the feature a significant new addition (rather than an improvement to an existing part)? If yes, will the community commit to maintaining this feature?

- Does this feature align well with currently ongoing efforts?

- Does the feature produce additional value for TiDB users or developers? Or does it introduce the risk of regression without adding relevant user or developer benefit?

Thinking these questions through helps the issue proceed faster and helps build your own reputation in the community.

Understanding the issue's progress and status

Once your issue is created, other contributors might take part. You need to discuss with them, provide the information they ask for, and address their comments to reach consensus and move the issue forward. But please realize that there are always more pending issues than contributors are able to handle. The TiDB community is a global one: contributors reside all over the world, and they might already be very busy with their own work and life. Please be patient! If your issue gets stale for some time, it's okay to ping other participants, or take it to internal.tidb.io for more attention.

Disagreement with a resolution on the issue tracker

As humans, we will have differences of opinions from time to time. First and foremost, please be respectful that care, thought, and volunteer time went into the resolution.

With this in mind, take some time to consider any comments made in association with the resolution of the issue. On reflection, the resolution steps may seem more reasonable than you initially thought.

If you still feel the resolution is incorrect, then raise a thoughtful question on internal.tidb.io. Further argument and disrespectful discourse on internal.tidb.io after a consensus has been reached amongst the committers is unlikely to win any converts.

Reporting security vulnerabilities

Security issues should not be reported in public early, so a different tracking strategy is used for them. Please refer to the dedicated process.

Issue Triage

TiDB uses an issue-centric workflow for development. Every problem, enhancement and feature starts with an issue. For bug issues, you need to perform some more triage operations on the issues.

Diagnose issue severity

The severity of a bug reflects the level of impact that the bug has on users when they use TiDB. The greater the impact, the higher the severity. Higher severity bugs need to be fixed faster. Although the possible impacts of bugs cannot be listed exhaustively, they can be divided into four levels.

Critical

The bug affects critical functionality or critical data. It might cause huge losses to users and does not have a workaround. Some typical critical bugs are as follows:

- Invalid query result (correctness issues)

- TiDB returns incorrect results or results that are in the wrong order for a typical user-written query.

- Bugs caused by type casts.

- The parameters are neither boundary values nor invalid values, but the query result is incorrect (except for overflow cases).

- Incorrect DDL and DML result

- The data is not written to the disk, or wrong data is written.

- Data and index are inconsistent.

- Invalid features

- Due to a regression, the feature can not work in its main workflow

- Follower read does not work.

- SQL hint does not work.

- SQL Plan

- Cannot choose the best index. The difference between best plan and chosen plan is bigger than 200%.

- DDL design

- DDL process causes data accuracy issue.

- Experimental feature

- If the issue causes another stable feature's main workflow to stop working, and may occur on a released version, the severity is critical.

- If the issue leads to data loss, the severity is critical.

- Exceptions

- If the feature is clearly labeled as experimental, when it doesn’t work but doesn’t impact another stable feature’s main workflow or only impacts stable feature’s main workflow on master, the issue severity is major.

- The feature has been deprecated and a viable workaround is available(at most major).

- System stability

- The system is unavailable for more than 5 minutes(if there are some system errors, the timing starts from failure recovery).

- Tools cannot perform replication between upstream and downstream for more than 1 minute if there are no system errors.

- TiDB cannot perform the upgrade operation.

- TPS/QPS dropped 25% without system errors or rolling upgrades.

- Unexpected TiKV core dump or TiDB panic(process crashed).

- System resource leak, including but not limited to memory leak and goroutine leak.

- System fails to recover from crash.

- Security and compliance issues

- CVSS score >= 9.0.

- TiDB leaks secure information to log files, or prints customer data when set to be desensitized.

- Backup or Recovery Issues

- Failure to either backup or restore is always considered critical.

- Incompatible Issues

- Syntax/compatibility issue affecting the default install of a tier 1 application (e.g. WordPress).

- The DML result is incompatible with MySQL.

- CI test case fail

- Test cases which lead to CI failure and could always be reproduced.

- Bug location information

- Key information is missing in ERROR level log.

- No data is reported in monitor.

Major

The bug affects major functionality. Some typical major bugs are as follows:

- Invalid query result

- The query gets the wrong result caused by overflow.

- The query gets the wrong result in the corner case.

- For boundary value, the processing logic in TiDB is inconsistent with MySQL.

- Inconsistent data precision.

- Incorrect DML or DDL result

- Extra or wrong data is written to TiDB with a DML in a corner case.

- Invalid features

- The corner case of the main workflow of the feature does not work.

- The feature is experimental, but a main workflow does not work.

- Incompatible issue of view functionality.

- SQL Plan

- Chooses a sub-optimal plan. The difference between the best plan and the chosen plan is bigger than 100% and less than 200%.

- System stability

- TiDB panics but process does not exit.

- Less important security and compliance issues

- CVSS score >= 7.0

- Issues that affect critical functionality or critical data but are rare to reproduce (can’t be reproduced in one week, and have no clear reproduce steps)

- CI test cases fail

- Test case is not stable.

- Bug location information

- Key information is missing in WARN level log.

- Data is not accurate in monitor.

Moderate

- SQL Plan

- Cannot get the best plan due to invalid statistics.

- Documentation issues

- Bugs caused by invalid parameters that rarely occur in the production environment.

- Security issues

- CVSS score >= 4.0

- Incompatible issues occurred on boundary value

- Bug location information

- Key information is missing in DEBUG/INFO level log.

Minor

The bug does not affect functionality or data. It does not even need a workaround. It does not impact productivity or efficiency. It is merely an inconvenience. For example:

- Invalid notification

- Minor compatibility issues

- Error message or error code does not match MySQL.

- Issues caused by invalid parameters or abnormal cases.
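The CVSS thresholds listed under Critical, Major, and Moderate can be read as a simple cascade. The following Go sketch encodes only those three thresholds. The function name `severityFromCVSS` is hypothetical, and because the lists above do not say how scores below 4.0 are classified, the sketch reports them as unspecified rather than guessing.

```go
package main

import "fmt"

// severityFromCVSS maps a CVSS score to the severity level named in the
// lists above. Scores below 4.0 are not covered there, so the function
// reports "unspecified" instead of guessing.
func severityFromCVSS(score float64) string {
	switch {
	case score >= 9.0:
		return "critical"
	case score >= 7.0:
		return "major"
	case score >= 4.0:
		return "moderate"
	default:
		return "unspecified"
	}
}

func main() {
	for _, s := range []float64{9.8, 7.5, 4.0, 2.1} {
		fmt.Printf("CVSS %.1f -> %s\n", s, severityFromCVSS(s))
	}
}
```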

Not a bug

The following issues look like bugs but actually are not. They should not be labeled type/bug and should instead be labeled only type/compatibility:

- Behavior is different from MySQL, but could be argued as correct.

- Behavior is different from MySQL, but MySQL behavior differs between 5.7 and 8.0.

Identify issue affected releases

For type/bug issues, when they are created and identified as severity/critical or severity/major, the ti-chi-bot will assign a list of may-affects-x.y labels to the issue. For example, suppose the maintained versions are 4.0, 5.0, 5.1, 5.2, and 5.3, and 5.4 is the in-sprint version. When a type/bug issue is created and labeled severity/critical or severity/major, the ti-chi-bot adds the labels may-affects-4.0, may-affects-5.0, may-affects-5.1, may-affects-5.2, and may-affects-5.3. These labels mean that whether the bug affects these release versions has not yet been determined and is awaiting triage. You can check the currently maintained releases list for all releases.
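The labeling rule in the paragraph above can be sketched in Go as follows. This is an illustration of the described behavior only, not the ti-chi-bot implementation. The function names and the idea of passing the maintained versions as a slice are assumptions made for the example.

```go
package main

import (
	"fmt"
	"strings"
)

// hasLabel reports whether labels contains want.
func hasLabel(labels []string, want string) bool {
	for _, l := range labels {
		if l == want {
			return true
		}
	}
	return false
}

// initialMayAffectsLabels returns one may-affects-x.y label per maintained
// version, but only for type/bug issues marked severity/critical or
// severity/major. Hypothetical helper for illustration.
func initialMayAffectsLabels(issueLabels, maintained []string) []string {
	if !hasLabel(issueLabels, "type/bug") {
		return nil
	}
	if !hasLabel(issueLabels, "severity/critical") && !hasLabel(issueLabels, "severity/major") {
		return nil
	}
	out := make([]string, 0, len(maintained))
	for _, v := range maintained {
		out = append(out, "may-affects-"+v)
	}
	return out
}

func main() {
	// The in-sprint version (5.4 in the example above) is not included.
	maintained := []string{"4.0", "5.0", "5.1", "5.2", "5.3"}
	labels := initialMayAffectsLabels([]string{"type/bug", "severity/major"}, maintained)
	fmt.Println(strings.Join(labels, ", "))
}
```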

When a version is triaged, the triager needs to remove the corresponding may-affects-x.y label. If the version is affected, the triager adds the corresponding affects-x.y label to the issue, and the ti-chi-bot can then remove the may-affects-x.y label automatically; otherwise, the triager simply removes the may-affects-x.y label. So when an issue has a may-affects-x.y label, the issue has not been diagnosed on version x.y. When an issue has an affects-x.y label, the issue has been diagnosed on version x.y and identified as affected. When both labels are missing, the issue has been diagnosed on version x.y and the version is not affected.

Whether an issue affects a certain version can therefore be determined from the combination of the corresponding may-affects-x.y and affects-x.y labels on the issue. See the table below for a clearer illustration.

| may-affects-x.y | affects-x.y | status |
|---|---|---|
| YES | NO | version x.y has not been diagnosed |
| NO | NO | version x.y has been diagnosed and identified as not affected |
| NO | YES | version x.y has been diagnosed and identified as affected |
| YES | YES | invalid status |
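The table can also be read as a small decision function. The Go sketch below is only an illustration of the four rows. The name `triageStatus` and the returned strings are hypothetical and are not taken from any TiDB bot.

```go
package main

import "fmt"

// triageStatus reports the triage state of one version, based on whether the
// issue carries the may-affects-x.y and affects-x.y labels for that version.
// Hypothetical helper that mirrors the table above.
func triageStatus(labels []string, version string) string {
	may, affects := false, false
	for _, l := range labels {
		switch l {
		case "may-affects-" + version:
			may = true
		case "affects-" + version:
			affects = true
		}
	}
	switch {
	case may && affects:
		return "invalid status"
	case may:
		return "not diagnosed"
	case affects:
		return "diagnosed, affected"
	default:
		return "diagnosed, not affected"
	}
}

func main() {
	labels := []string{"type/bug", "severity/major", "may-affects-5.2", "affects-5.3"}
	for _, v := range []string{"5.1", "5.2", "5.3"} {
		fmt.Printf("%s: %s\n", v, triageStatus(labels, v))
	}
}
```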

Contribute Code

TiDB is maintained, improved, and extended by code contributions. We welcome code contributions to TiDB. TiDB uses a workflow based on pull requests.

Before contributing

Contributing to TiDB does not start with opening a pull request. We expect contributors to reach out to us first to discuss the overall approach together. Without consensus with the TiDB committers, contributions might require substantial rework or might not be reviewed. So please create a GitHub issue, discuss under an existing issue, or create a topic on internal.tidb.io and reach consensus.

For newcomers, you can check the starter issues, which are annotated with a "good first issue" label. These are issues suitable for new contributors to work with and won't take long to fix. But because the label is typically added at triage time it can turn out to be inaccurate, so do feel free to leave a comment if you think the classification no longer applies.

To get your change merged you need to sign the CLA to grant PingCAP ownership of your code.

Contributing process

After a consensus is reached in issues, it's time to start the code contributing process:

- Assign the issue to yourself via /assign. This lets other contributors know you are working on the issue so they won't make duplicate efforts.

- Follow the GitHub workflow, commit code changes in your own git repository branch and open a pull request for code review.

- Make sure the continuous integration checks on your pull request are green (i.e. successful).

- Review and address comments on your pull request. If your pull request becomes unmergeable, you need to rebase your pull request to keep it up to date. Since TiDB uses squash and merge, simply merging master to catch up the change is also acceptable.

- When your pull request gets enough approvals (the default number is 2) and all other requirements are met, it will be merged.

- Handle regressions introduced by your change. Although committers bear the main responsibility to fix regressions, it's quite nice for you to handle it (reverting the change or sending fixes).

Clear and kind communication is key to this process.

Referring to an issue

Code repositories in the TiDB community require ALL pull requests to refer to their corresponding issues. In the pull request body, there MUST be one line that starts with Issue Number: and links the relevant issues via a keyword.

If the pull request resolves the relevant issues, and you want GitHub to close these issues automatically after it is merged into the default branch, you can use the syntax (KEYWORD #ISSUE-NUMBER) like this:

Issue Number: close #123

If the pull request links an issue but does not close it, you can use the keyword ref like this:

Issue Number: ref #456

Multiple issues should use full syntax for each issue and separate by a comma, like:

Issue Number: close #123, ref #456

For pull requests trying to close issues in a different repository, contributors need to first create an issue in the same repository and use this issue to track.

If the pull request body does not provide the required content, the bot will add the do-not-merge/needs-linked-issue label to the pull request to prevent it from being merged.
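As a rough illustration of the format described above, the Go sketch below looks for an Issue Number: line and checks that every comma-separated entry uses the full KEYWORD #ISSUE-NUMBER syntax. It is not the bot's implementation. The accepted keyword set (GitHub's closing keywords plus ref), the type `issueRef`, and the function name are assumptions made for the example.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// issueRef is a hypothetical type holding one "KEYWORD #NUMBER" entry.
type issueRef struct {
	Keyword string
	Number  string
}

// refPattern matches one entry such as "close #123" or "ref #456".
// The keyword set is an assumption: GitHub's closing keywords plus "ref".
var refPattern = regexp.MustCompile(`(?i)^(close[sd]?|fix(?:e[sd])?|resolve[sd]?|ref)\s+#(\d+)$`)

// parseIssueNumberLine finds the first line starting with "Issue Number:" and
// parses its comma-separated entries. It returns false if the line is missing
// or if any entry does not use the full syntax.
func parseIssueNumberLine(body string) ([]issueRef, bool) {
	const prefix = "Issue Number:"
	for _, line := range strings.Split(body, "\n") {
		line = strings.TrimSpace(line)
		if !strings.HasPrefix(line, prefix) {
			continue
		}
		var refs []issueRef
		for _, part := range strings.Split(strings.TrimPrefix(line, prefix), ",") {
			m := refPattern.FindStringSubmatch(strings.TrimSpace(part))
			if m == nil {
				return nil, false
			}
			refs = append(refs, issueRef{Keyword: strings.ToLower(m[1]), Number: m[2]})
		}
		return refs, true
	}
	return nil, false
}

func main() {
	body := "### What problem does this PR solve?\n\nIssue Number: close #123, ref #456\n"
	refs, ok := parseIssueNumberLine(body)
	fmt.Println(ok, refs)
}
```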

Writing tests

Tests are an important part of any code contribution to TiDB and should always be considered part of your change. Any code change that alters semantics or adds new functionality to TiDB should have corresponding test cases. And of course you must not break any existing test cases if they are still valid. It's recommended to run tests in your local environment first to find obvious problems and fix them before opening the pull request.

Pull requests that contain only test cases to increase the test coverage of TiDB are also highly appreciated. Supplementing test cases for existing modules is a good and easy way to become acquainted with existing code.

Making good pull requests

When creating a pull request for submission, there are several things that you should consider to help ensure that your pull request is accepted:

- Does the contribution alter the behavior of features or components in a way that it may break previous users' programs and setups? If yes, there needs to be a discussion and agreement that this change is desirable.

- Does the contribution conceptually fit well into TiDB? Is it too much of a special case such that it makes things more complicated for the common case, or bloats the abstractions/APIs?

- Does the contribution make a big impact on TiDB's build time?

- Does your contribution affect any documentation? If yes, you should add/change proper documentation.

- If there are any new dependencies, are they under active maintenance? What are their licenses?

Making good commits

Each feature or bugfix should be addressed by a single pull request, and for each pull request there may be several commits. In particular:

- Do not fix more than one issue in the same commit (except, of course, if one code change fixes all of them).

- Do not do cosmetic changes to unrelated code in the same commit as some feature/bugfix.

Waiting for review

To begin with, please be patient! There are many more people submitting pull requests than there are people capable of reviewing your pull request. Getting your pull request reviewed requires a reviewer to have the spare time and motivation to look at your pull request. If your pull request has not received any notice from reviewers (i.e., no comment made) for some time, you can ping the reviewers and assignees, or take it to internal.tidb.io for more attention.

When someone does manage to find the time to look at your pull request, they will most likely make comments about how it can be improved (don't worry, even committers/maintainers have their pull requests sent back to them for changes). It is then expected that you update your pull request to address these comments, and the review process will thus iterate until a satisfactory solution has emerged.

Cherry-pick a Pull Request

TiDB uses a release train model and has multiple releases. Each release matches one git branch. type/bug issues with severity/critical or severity/major are expected to be fixed on every currently maintained release that is affected. Contributors and reviewers are responsible for settling the affected versions once the bug is identified as severity/critical or severity/major. After the original pull request is merged, cherry-pick pull requests shall be created to port the fix to the affected branches. Bots in the TiDB community can help lighten your workload when creating cherry-pick pull requests.

What kind of pull requests need to cherry-pick?

Because the number of TiDB releases keeps growing and developer time is limited, we are not going to cherry-pick every pull request. Currently, only problems with severity/critical and severity/major are candidates for cherry-pick. These problems shall be solved on all affected maintained releases. Check the Issue Triage chapter for severity identification.

Create cherry-pick pull requests automatically

Typically, TiDB repos use ti-chi-bot to help contributors create cherry-pick pull requests automatically.

ti-chi-bot creates the corresponding cherry-pick pull requests according to the needs-cherry-pick-<release-branch-name> labels on the original pull request once it's merged. If there is any failure or omission, contributors can run /cherry-pick <release-branch-name> to trigger cherry-pick for a specific release.

Create cherry-pick pull requests manually

Contributors could also create cherry-pick pull requests manually if they want. git cherry-pick is a good command for this. The requirements in Contribute Code also apply here.

Pass triage complete check

For pull requests, the check-issue-triage-complete checker first checks whether the corresponding issue has any type/xx label; if not, the checker fails. Then, for issues with the type/bug label, there must also be a severity/xx label; otherwise, the checker fails. For a type/bug issue with the severity/critical or severity/major label, the checker checks whether there is any may-affects-x.y label, which means the issue has not been diagnosed on all needed versions. If there is, the pull request is blocked and cannot be merged. So in order to merge a bugfix pull request into the target branch, every version that still carries a may-affects-x.y label must first be diagnosed. TiDB maintainers add these labels.
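The three checks described above can be sketched in Go as follows. This models the described rules only and is not the actual checker. The function names and the failure messages are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// anyWithPrefix reports whether any label starts with prefix.
func anyWithPrefix(labels []string, prefix string) bool {
	for _, l := range labels {
		if strings.HasPrefix(l, prefix) {
			return true
		}
	}
	return false
}

// contains reports whether labels includes want.
func contains(labels []string, want string) bool {
	for _, l := range labels {
		if l == want {
			return true
		}
	}
	return false
}

// triageComplete applies the rules from the paragraph above to the labels of
// the linked issue and returns whether the check passes, plus a reason when
// it fails. Hypothetical helper for illustration.
func triageComplete(issueLabels []string) (bool, string) {
	if !anyWithPrefix(issueLabels, "type/") {
		return false, "missing type/xx label"
	}
	if !contains(issueLabels, "type/bug") {
		return true, ""
	}
	if !anyWithPrefix(issueLabels, "severity/") {
		return false, "missing severity/xx label"
	}
	severe := contains(issueLabels, "severity/critical") || contains(issueLabels, "severity/major")
	if severe && anyWithPrefix(issueLabels, "may-affects-") {
		return false, "some versions are still undiagnosed"
	}
	return true, ""
}

func main() {
	ok, reason := triageComplete([]string{"type/bug", "severity/major", "may-affects-5.3"})
	fmt.Println(ok, reason)
	ok, reason = triageComplete([]string{"type/bug", "severity/major", "affects-5.3"})
	fmt.Println(ok, reason)
}
```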

ti-chi-bot automatically triggers the checker on the associated PR by listening to the labeled/unlabeled events of may-affects-x.y labels on bug issues. Contributors can also comment /check-issue-triage-complete or /run-check-issue-triage-complete, as with other checkers, to rerun the checker manually and update the status. Once the check-issue-triage-complete checker passes, ti-chi-bot will add needs-cherry-pick-<release-version>/needs-cherry-pick-<release-branch-name> labels to pull requests according to the affects-x.y labels on the corresponding issues.

In addition, if the checker fails, the robot will add the do-not-merge/needs-triage-completed label to the pull request at the same time, which will be used by other plugins like tars.
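To make the label derivation that happens after a passing check more concrete, here is a minimal Go sketch that turns affects-x.y labels on an issue into needs-cherry-pick labels for the pull request. The function name is hypothetical, and the `release-x.y` branch naming used to build the label is an assumption for illustration; check the actual labels in the repository you are working on.

```go
package main

import (
	"fmt"
	"strings"
)

// cherryPickLabels derives one needs-cherry-pick label per affects-x.y label.
// Labels such as may-affects-x.y are ignored because they do not start with
// "affects-". The "release-" branch prefix is an assumption for illustration.
func cherryPickLabels(issueLabels []string) []string {
	const prefix = "affects-"
	var out []string
	for _, l := range issueLabels {
		if strings.HasPrefix(l, prefix) {
			version := strings.TrimPrefix(l, prefix)
			out = append(out, "needs-cherry-pick-release-"+version)
		}
	}
	return out
}

func main() {
	issue := []string{"type/bug", "severity/critical", "affects-5.2", "affects-5.3"}
	fmt.Println(strings.Join(cherryPickLabels(issue), ", "))
}
```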

Review cherry-pick pull requests

Cherry-pick pull requests obey the same review rules as other pull requests. In addition to the normal merge requirements, cherry-pick pull requests initially carry the do-not-merge/cherry-pick-not-approved label. To get one merged, it needs an additional cherry-pick-approved label from the qa-release-merge team.

Troubleshoot cherry-pick

- If there is any error in the cherry-pick process, for example, the bot fails to create some cherry-pick pull requests, you could ask reviewers/committers/maintainers for help.

- If there are conflicts in the cherry-pick pull requests, you must resolve the conflicts to get the pull requests merged. There are several ways to do so:

- Request privileges to the forked repo by sending a /cherry-pick-invite comment in the cherry-pick pull request if you are a member of the organization. When you accept the invitation, you can push directly to the pull request branch.

- Ask committers/maintainers to do that for you if you are not a member of the organization.

- Manually create a new cherry-pick pull request for the branch.

Review a Pull Request

TiDB values any code review. One of the bottlenecks in the TiDB development process is the lack of code reviews. If you browse the issue tracker, you will see that numerous issues have a fix, but cannot be merged into the main source code repository, because no one has reviewed the proposed solution. Reviewing a pull request can be just as informative as providing a pull request and it will allow you to give constructive comments on another developer's work. It is a common misconception that in order to be useful, a code review has to be perfect. This is not the case at all! It is helpful to just test the pull request and/or play around with the code and leave comments in the pull request.

Principles of the code review

- Technical facts and data overrule opinions and personal preferences.

- Software design is about trade-offs, and there is no silver bullet.

Everyone comes from different technical backgrounds with different knowledge. They have their own personal preferences. It is important that the code review is not based on biased opinions.

Sometimes, making choices of accepting or rejecting a pull request can be tricky as in the following situations:

- Suppose that a pull request contains special optimization that can improve the overall performance by 30%. However, the pull request introduces a totally different code path, and every subsequent feature must consider it.

- Suppose that a pull request is to fix a critical bug, but the change in the pull request is risky to introduce other bugs.

If a pull request under your review is in these tricky situations, what is the right choice, accepting the pull request or rejecting it? The answer is always "it depends." Software design is more like a kind of art than technology. It is about aesthetics and your taste of the code. There are always trade-offs, and often there's no perfect solution.

Triaging pull requests

Some pull request authors may not be familiar with TiDB, TiDB development workflow or TiDB community. They don't know what labels should be added to the pull requests and which experts could be asked for review. If you are able to, it would be great for you to triage the pull requests, adding suitable labels to the pull requests, asking corresponding experts to review the pull requests. These actions could help more contributors notice the pull requests and make quick responses.

Checking pull requests

There are some basic aspects to check when you review a pull request:

- Concentration. One pull request should only do one thing. No matter how small it is, the change does exactly one thing and gets it right. Don't mix other changes into it.

- Tests. A pull request should be covered by tests, whether they are unit tests, integration tests, or end-to-end tests. Tests should be sufficient and correct, and should not significantly slow down the CI pipeline.

- Functionality. The pull request should implement what the author intends to do and fit well in the existing code base, resolve a real problem for TiDB users. To get the author's intention and the pull request design, you could follow the discussions in the corresponding GitHub issue or internal.tidb.io topic.

- Style. Code in the pull request should follow common programming style. For Go and Rust, there are built-in tools with the compiler toolchain. However, sometimes the existing code is inconsistent with the style guide, you should maintain consistency with the existing code or file a new issue to fix the existing code style first.

- Documentation. If a pull request changes how users build, test, interact with, or release code, you must check whether it also updates the related documentation such as READMEs and any generated reference docs. Similarly, if a pull request deletes or deprecates code, you must check whether or not the corresponding documentation should also be deleted.

- Performance. If you find the pull request may affect performance, you could ask the author to provide a benchmark result.

Writing code review comments

When you review a pull request, there are several rules and suggestions you should follow to write better comments:

- Be respectful to pull request authors and other reviewers. Code review is a part of your community activities. You should follow the community requirements.

- Ask questions instead of making statements. The wording of the review comments is very important. To provide review comments that are constructive rather than critical, you can try asking questions rather than making statements.

- Offer sincere praise. Good reviewers focus not only on what is wrong with the code but also on good practices in the code. As a reviewer, you are recommended to offer your encouragement and appreciation to the authors for their good practices in the code. In terms of mentoring, telling the authors what they did right is even more valuable than telling them what they did wrong.

- Provide additional details and context of your review process instead of simply "approving" the pull request. If you test the pull request, report the result and your test environment details. If you request changes, try to suggest how.

Accepting pull requests

Once you think the pull request is ready, you can approve it. Commenting with /lgtm is also valid.

In the TiDB community, most repositories require two approvals before a pull request can be accepted. A few repositories require a different number of approvals, but two approvals are the default setting. After the required lgtm count is met, the lgtm label is added.

Finally, a committer can /approve the pull request. Some special scopes need /approve from the scope approvers (defined in OWNERS files).

Make a Proposal

This page defines the best practices procedure for making a proposal in TiDB projects. This text is based on the content of TiDB Design Document.

Motivation

Many changes, including bug fixes and documentation improvements, can be implemented and reviewed via the normal GitHub pull request workflow.

Some changes though are "substantial", and we ask that these be put through a bit of a design process and produce a consensus among the TiDB community.

The process described in this page is intended to provide a consistent and controlled path for new features to enter the TiDB projects, so that all stakeholders can be confident about the direction the projects are evolving in.

Who should initiate the design document?

Everyone is encouraged to initiate a design document, but before doing it, please make sure you have an intention of getting the work done to implement it.

Before creating a design document

A hastily-proposed design document can hurt its chances of acceptance. Low-quality proposals, proposals for previously-rejected features, or those that don't fit into the near-term roadmap, may be quickly rejected, which can be demotivating for the unprepared contributor. Laying some groundwork ahead of the design document can make the process smoother.

Although there is no single way to prepare for submitting a design document, it is generally a good idea to pursue feedback from other project developers beforehand, to ascertain that the design document may be desirable; having a consistent impact on the project requires concerted effort toward consensus-building.

The most common way to prepare is to discuss a draft of the design document on the TiDB Internals forum before writing and submitting it.

What is the process?

- Create an issue describing the problem, goal and solution.

- Get responses from other contributors to see if the proposal is generally acceptable.

- Create a pull request with a design document based on the template, named as YYYY-MM-DD-my-feature.md.

- Discussion takes place, and the text is revised in response.

- The design document is accepted or rejected when at least two committers reach consensus and no committer objects.

- If accepted, create a tracking issue for the design document or convert one from a previous discussion issue. The tracking issue tracks subtasks and progress. Reference the tracking issue in the design document, replacing the placeholder in the template.

- Merge the pull request of design.

- Start the implementation.

Please reference the tracking issue from each subtask so that progress can be tracked.

An example that almost fits into this model is the proposal "Support global index for partition table", without following the latest template.

TiDB Code Style and Quality Guide

This is an attempt to capture the code and quality standard that we want to maintain.

The newtype pattern improves code quality

We can create a new type using the type keyword.

The newtype pattern is perhaps most often used in Golang to get around type restrictions rather than to try to create new ones. It is used to create different interface implementations for a type or to extend a builtin type or a type from an existing package with new methods.

However, it is generally useful to improve code clarity by marking that data has gone through either a validation or a transformation. Using a different type can reduce error handling and prevent improper usage.

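package main

import (
    "fmt"
    "strings"
)

// Email is a string that has been validated by newEmail.
type Email string

// newEmail checks the input and, if it is valid, returns it as an Email.
func newEmail(email string) (Email, error) {
    if !strings.Contains(email, "@") {
        return Email(""), fmt.Errorf("expected @ in the email")
    }
    return Email(email), nil
}

// Domain indexes the split result directly: an Email built by newEmail contains "@".
func (email Email) Domain() string {
    return strings.Split(string(email), "@")[1]
}

func main() {
    ping, err := newEmail("go@pingcap.com")
    if err != nil {
        panic(err)
    }
    fmt.Println(ping.Domain())
}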

When to use value or pointer receiver

Because pointer receivers need to be used some of the time, Go programmers often use them all of the time. This is a typical outline of Go code:

type S struct{}

func NewStruct() *S { return &S{} }

func (s *S) structMethod() {}

Using pointers for the entire method set means we have to read the source code of every function to determine if it mutates the struct. Mutations are a source of error. This is particularly true in concurrent programs. We can contrast this with values: a method with a value receiver works on its own copy, so it cannot modify the caller's struct and is safe to call concurrently (as long as the struct does not hold shared references such as maps, slices, or pointers).

For code clarity and bug reduction, a best practice is to default to using values and value receivers. However, pointer receivers are often required to satisfy an interface or for performance reasons, and this need overrides any default practice.

Performance can favor either approach. One might assume that pointers would always perform better because they avoid copying. However, the performance is roughly the same for small structs in micro benchmarks. This is because the copying is cheap, inlining can often avoid copying anyway, and pointer indirection has its own small cost. In a larger program with a goal of predictable low latency, the value approach can be more favorable because it avoids heap allocation and any additional GC overhead.

As a rule of thumb, when a struct has 10 or more words we should use pointer receivers. However, which approach is actually best for performance depends on how the struct is used in the program and must ultimately be determined by profiling. For example, these are some factors that affect things:

- method size: small inlineable methods favor value receivers.

- Is the method called repeatedly in a for loop? This favors pointer receivers.

- What is the GC behavior of the rest of the program? GC pressure may favor value receivers.
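To make the contrast concrete, here is a minimal sketch of the two receiver kinds. The Point and Counter types and their methods are hypothetical and exist only for illustration; they are not taken from the TiDB code base.

```go
package main

import "fmt"

// Point is small (two words), so value receivers are a reasonable default.
type Point struct {
	X, Y int
}

// Moved has a value receiver: it works on a copy and returns a new Point,
// so the caller's value is never mutated.
func (p Point) Moved(dx, dy int) Point {
	p.X += dx
	p.Y += dy
	return p
}

// Counter must be mutated in place, so its method uses a pointer receiver.
type Counter struct {
	n int
}

// Inc has a pointer receiver: the change is visible to the caller.
func (c *Counter) Inc() {
	c.n++
}

func main() {
	p := Point{X: 1, Y: 2}
	q := p.Moved(10, 10)
	fmt.Println(p, q) // {1 2} {11 12}

	c := &Counter{}
	c.Inc()
	c.Inc()
	fmt.Println(c.n) // 2
}
```

A reader of the call site p.Moved(10, 10) can tell from the value receiver alone that p is left unchanged, whereas c.Inc() signals a mutation.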

Parallel For-Loop

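There are two types of for loop on range: "with index" and "without index". In this section, "with index" refers to the form that also binds the element value to a loop variable (for i, v := range s, or for _, v := range s), and "without index" refers to the form that does not bind the value (for i := range s). Let's see an example for range with index.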

func TestRangeWithIndex(t *testing.T) {
    rows := []struct{ index int }{{index: 0}, {index: 1}, {index: 2}}
    for _, row := range rows {
        row.index += 10 // modifies the loop variable, not the slice element
    }
    for i, row := range rows {
        require.Equal(t, i+10, row.index)
    }
}

The output is:

Error Trace: version_test.go:39
Error:       Not equal:
             expected: 10
             actual  : 0
Test:        TestShowRangeWithIndex

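The test fails because row is a copy of each element: in a range loop that binds the element value, the loop variable receives a clone of the iteration target, so row.index += 10 changes only the clone and leaves rows untouched. In Go versions before 1.22, that loop variable is also a single instance that every iteration reuses.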
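One way to make the update take effect is to write through the index instead of the loop variable. The sketch below contrasts the two forms; the row type and the helper names addTenByValue and addTenByIndex are made up for illustration.

```go
package main

import "fmt"

type row struct{ index int }

// addTenByValue ranges over copies of the elements, so rows is unchanged.
func addTenByValue(rows []row) {
	for _, r := range rows {
		r.index += 10 // modifies the copy only
	}
}

// addTenByIndex writes through the index, so the slice elements are updated.
func addTenByIndex(rows []row) {
	for i := range rows {
		rows[i].index += 10
	}
}

func main() {
	rows := []row{{0}, {1}, {2}}

	addTenByValue(rows)
	fmt.Println(rows) // [{0} {1} {2}]

	addTenByIndex(rows)
	fmt.Println(rows) // [{10} {11} {12}]
}
```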

The same instance of the variable

Because the loop iterator variable is the same instance of the variable in every iteration (in Go versions before 1.22), it may result in tricky errors with a parallel for-loop.

done := make(chan bool)
values := []string{"a", "b", "c"}
for _, v := range values {
    go func() {
        fmt.Println(v) // the closure captures the variable v, not its current value
        done <- true
    }()
}
// Wait for all goroutines to finish.
for range values {
    <-done
}

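You might expect to see a, b, c as the output, but with Go versions before 1.22 you will probably see c, c, c instead.

This is because each iteration of the loop uses the same instance of the variable v, so each closure shares that single variable. Starting with Go 1.22, each iteration gets its own v in modules that declare go 1.22 or later, and the program prints a, b, c in some order.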

This is the same reason that tests produce wrong results when t.Parallel() is used inside a range loop, which is covered in the Parallel section of Write and run unit tests.
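A common way to avoid sharing the loop variable, regardless of the Go version, is to pass the current value to the goroutine as an argument. The following is a minimal sketch; the collect function is a hypothetical helper, and it gathers results into a sorted slice only so that the outcome is deterministic.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// collect starts one goroutine per value and passes the value as an argument,
// so each goroutine works on its own copy instead of the shared loop variable.
func collect(values []string) []string {
	var (
		mu  sync.Mutex
		wg  sync.WaitGroup
		out []string
	)
	for _, v := range values {
		wg.Add(1)
		go func(s string) {
			defer wg.Done()
			mu.Lock()
			out = append(out, s)
			mu.Unlock()
		}(v)
	}
	wg.Wait()
	sort.Strings(out) // goroutine scheduling order is not deterministic
	return out
}

func main() {
	fmt.Println(collect([]string{"a", "b", "c"})) // [a b c]
}
```

Declaring a new variable inside the loop body (v := v) before starting the goroutine achieves the same effect.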

A clone of iteration target value

Since the loop iterator variable is a clone of the iteration target value, modifying it may result in logic errors. Cloning can also lead to performance issues compared with a range loop that does not bind the value (for k := range items) or a bare for loop.

type Item struct {
    id    int
    value [1024]byte
}

func BenchmarkRangeIndexStruct(b *testing.B) {
    var items [1024]Item
    for i := 0; i < b.N; i++ {
        var tmp int
        for k := range items {
            tmp = items[k].id
        }
        _ = tmp
    }
}

func BenchmarkRangeStruct(b *testing.B) {
    var items [1024]Item
    for i := 0; i < b.N; i++ {
        var tmp int
        for _, item := range items {
            tmp = item.id
        }
        _ = tmp
    }
}

BenchmarkRangeIndexStruct-12 4875518 246.0 ns/op

BenchmarkRangeStruct-12 16171 77523 ns/op

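You can see that the loop that binds each element to a loop variable (BenchmarkRangeStruct) is roughly 300 times slower in this run than the loop that accesses elements only through the index (BenchmarkRangeIndexStruct). The first form clones every element it visits, so the performance drops sharply when each element occupies a lot of memory.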
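When the loop body needs more than one field, taking a pointer to the element keeps the readable item.field style without cloning the element. The sketch below reuses the Item layout from the benchmark; the sumIDs function is a hypothetical example and has not been benchmarked here.

```go
package main

import "fmt"

type Item struct {
	id    int
	value [1024]byte
}

// sumIDs iterates by index and takes a pointer to each element,
// so the 1 KiB value array is never copied.
func sumIDs(items []Item) int {
	total := 0
	for k := range items {
		item := &items[k]
		total += item.id
	}
	return total
}

func main() {
	items := make([]Item, 4)
	for i := range items {
		items[i].id = i + 1
	}
	fmt.Println(sumIDs(items)) // 10
}
```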

Write Document

Good documentation is crucial for any kind of software. This is especially true for sophisticated software systems such as a distributed database like TiDB. The TiDB community aims to provide concise, precise, and complete documentation and welcomes any contribution to improve TiDB's documentation.

Where you can contribute

The TiDB community provides bilingual documentation. The English documentation is maintained in the pingcap/docs repository (docs repo) and the Chinese documentation is maintained in the pingcap/docs-cn repository (docs-cn repo). You are welcome to contribute to either of the repositories.

In addition, you are also welcome to contribute to the TiDB Operator documentation.

This guide walks you through what and how you can contribute to the TiDB bilingual documentation in docs-cn and docs repos.

What you can contribute

You can start from any one of the following items to help improve the TiDB English documentation or Chinese documentation.

- Fix typos or format (punctuation, space, indentation, code block, etc.)

- Fix or update inappropriate or outdated descriptions

- Add missing content (sentence, paragraph, or a new document)

- Translate docs changes from English to Chinese, or from Chinese to English. See How we implement bilingual documentation.

- Submit, reply to, and resolve docs issues or docs-cn issues

- (Advanced) Review Pull Requests created by others